Part II. Installation

Virtualization installation topics


Chapter 5. Installing the virtualization packages

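Virtualization packages can be installed either during the Red Hat Enterprise Linux installation sequence or after installation, using the yum command and the Red Hat Network (RHN).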

5.1. Installing KVM with a new Red Hat Enterprise Linux installation

Need help installing?

- Start an interactive Red Hat Enterprise Linux installation from the Red Hat Enterprise Linux Installation CD-ROM, DVD or PXE.

- You must enter a valid installation number when prompted to receive access to the virtualization and other Advanced Platform packages.

- Complete the other steps up to the package selection step.

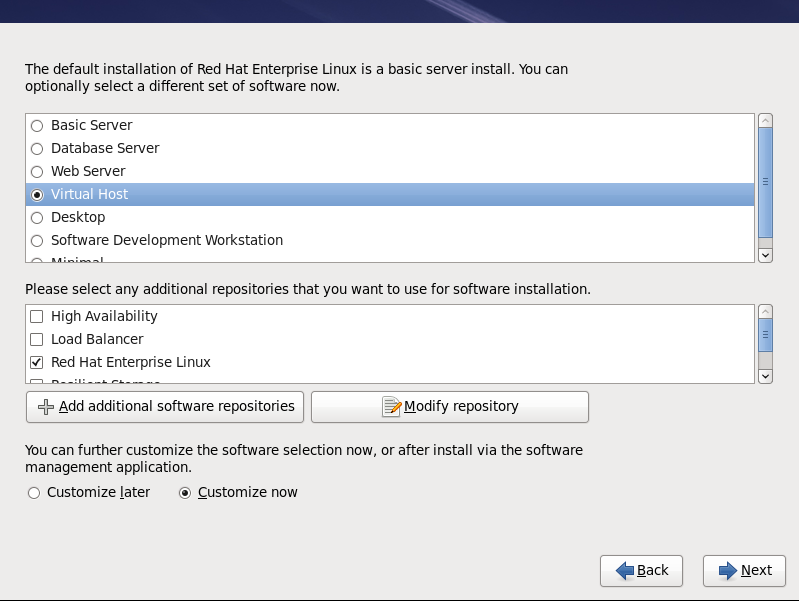

Select the Virtual Host server role to install a platform for virtualized guests. Alternatively, select the Customize Now radio button to specify individual packages.

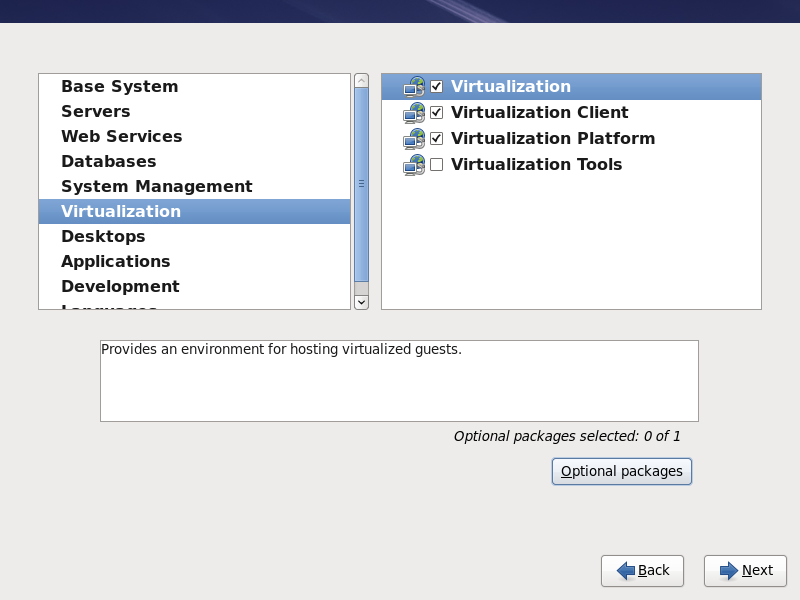

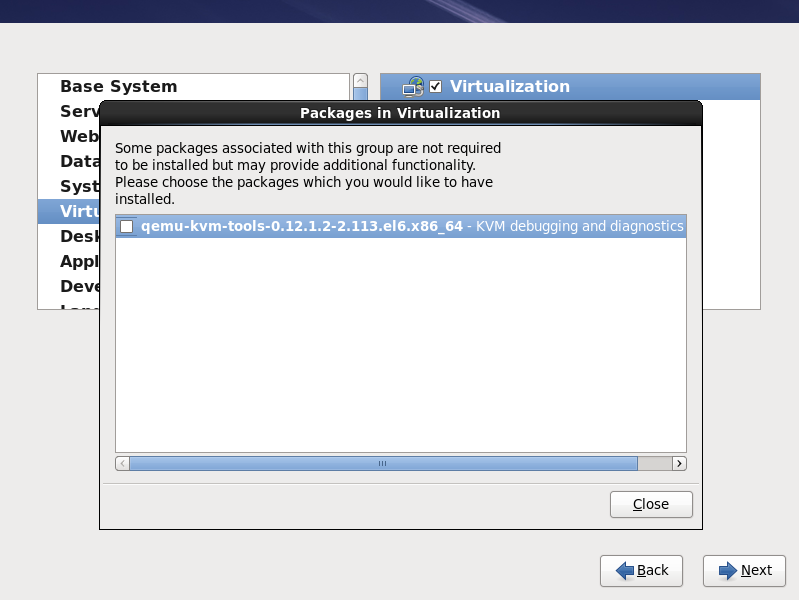

- Select the Virtualization package group. This selects the KVM hypervisor, virt-manager, libvirt and virt-viewer for installation.

Customize the packages (if required)

Customize the Virtualization group if you require other virtualization packages. Press the Close button then the Next button to continue the installation.


Note

Installing KVM packages with Kickstart files

This section describes how to use a Kickstart file to install Red Hat Enterprise Linux with the KVM hypervisor packages. Kickstart files allow for large, automated installations without a user manually installing each individual system. The steps in this section will assist you in creating and using a Kickstart file to install Red Hat Enterprise Linux with the virtualization packages.

In the %packages section of your Kickstart file, append the following package group:

%packages
@kvm
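Because a Kickstart file is plain text, this change can also be scripted. The following is a minimal sketch, assuming a POSIX shell; add_kvm_group is a hypothetical helper, not part of any Red Hat tool. It adds @kvm after the first %packages header of a Kickstart file, or appends a new section if the file has none. The appended section is closed with %end, assuming your installer version accepts it.

```shell
#!/bin/sh
# add_kvm_group FILE
# Hypothetical helper: select the @kvm package group in a Kickstart file.
add_kvm_group() {
    ks_file=$1
    # @kvm already listed: nothing to do.
    if grep -qx '@kvm' "$ks_file" 2>/dev/null; then
        return 0
    fi
    if grep -q '^%packages' "$ks_file" 2>/dev/null; then
        # Insert @kvm on the line after the first %packages header.
        awk '{ print } /^%packages/ && !done { print "@kvm"; done = 1 }' \
            "$ks_file" > "$ks_file.tmp" && mv "$ks_file.tmp" "$ks_file"
    else
        # No %packages section yet: append one, closed with %end
        # (assumes the installer accepts %end).
        printf '%%packages\n@kvm\n%%end\n' >> "$ks_file"
    fi
}
```

For example, add_kvm_group ks.cfg updates a Kickstart file named ks.cfg in the current directory; running it a second time changes nothing.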

5.2. Installing KVM packages on an existing Red Hat Enterprise Linux system

Adding packages to your list of Red Hat Network entitlements

This section describes how to enable Red Hat Network (RHN) entitlements for the virtualization packages. You need these entitlements enabled to install and update the virtualization packages on Red Hat Enterprise Linux, and you require a valid Red Hat Network account to do so.

If your system is not yet registered with RHN, run the rhn_register command and follow the prompts.

Procedure 5.1. Adding the Virtualization entitlement with RHN

- Log in to RHN using your RHN username and password.

- Select the systems you want to install virtualization on.

- In the System Properties section, the system's current entitlements are listed next to the Entitlements header. Use the link there to change your entitlements.

- Select the Virtualization checkbox.

Installing the KVM hypervisor with yum

To use virtualization on Red Hat Enterprise Linux, you require the kvm package. The kvm package contains the KVM kernel module, which provides the KVM hypervisor on the default Red Hat Enterprise Linux kernel.

To install the kvm package, run:

# yum install kvm

Recommended virtualization packages:

- python-virtinst: Provides the virt-install command for creating virtual machines.

- libvirt: Provides the server and host side libraries for interacting with hypervisors and host systems. The libvirt package also provides the libvirtd daemon that handles the library calls, manages virtualized guests and controls the hypervisor.

- libvirt-python: Contains a module that permits applications written in the Python programming language to use the interface supplied by the libvirt API.

- virt-manager: virt-manager, also known as Virtual Machine Manager, provides a graphical tool for administering virtual machines. It uses the libvirt-client library as the management API.

- libvirt-client: Provides the client-side APIs and libraries for accessing libvirt servers. The libvirt-client package includes the virsh command line tool to manage and control virtualized guests and hypervisors from the command line or a special virtualization shell.

To install all of the recommended packages, run:

# yum install virt-manager libvirt libvirt-python python-virtinst libvirt-client
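Before creating guests, it can help to confirm that the host is ready. The sketch below, assuming a POSIX shell on the host, checks two things: whether the CPU advertises hardware virtualization (the vmx flag for Intel VT-x or svm for AMD-V in /proc/cpuinfo) and whether the kvm kernel module is loaded (listed in /proc/modules). The helper names are hypothetical; the file paths are parameters only so that the checks can be tried against sample files.

```shell
#!/bin/sh
# has_hw_virt [CPUINFO]
# Succeeds if the CPU flags include vmx (Intel VT-x) or svm (AMD-V).
# A flag being present does not guarantee the feature is enabled in the BIOS.
has_hw_virt() {
    cpuinfo=${1:-/proc/cpuinfo}
    grep -Eqw 'vmx|svm' "$cpuinfo"
}

# kvm_module_loaded [MODULES]
# Succeeds if the generic kvm module is loaded; kvm_intel and kvm_amd
# depend on it, so it is listed whenever either vendor module is loaded.
kvm_module_loaded() {
    modules=${1:-/proc/modules}
    grep -q '^kvm ' "$modules"
}
```

On the host, has_hw_virt && kvm_module_loaded && echo ready runs both checks against the real files.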

Chapter 6. Virtualized guest installation overview

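You can create virtualized guests with the graphical virt-manager tool or with the command line tool virt-install. Both methods are covered by this chapter.

Detailed installation instructions are available for the following guest operating systems: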

- Red Hat Enterprise Linux 5.

- Para-virtualized Red Hat Enterprise Linux 6 on Red Hat Enterprise Linux 5: Chapter 8, Installing Red Hat Enterprise Linux 6 as a para-virtualized guest on Red Hat Enterprise Linux 5

- Red Hat Enterprise Linux 6: Chapter 7, Installing Red Hat Enterprise Linux 6 as a virtualized guest

- Microsoft Windows operating systems: Chapter 9, Installing a fully-virtualized Windows guest

6.1. Virtualized guest prerequisites and considerations

Consider the following factors before creating virtualized guests:

- Performance

- Input/output requirements and types of input/output.

- Storage.

- Networking and network infrastructure.

- Guest load and usage for processor and memory resources.

6.2. Creating guests with virt-install

Use the virt-install command to create virtualized guests from the command line. virt-install can be used either interactively or as part of a script to automate the creation of virtual machines. Using virt-install with Kickstart files allows for unattended installation of virtual machines.

The virt-install tool accepts a number of command line options. To see a complete list of options, run:

$ virt-install --help

The virt-install man page also documents each command option and important variables.

qemu-img is a related command which may be used before virt-install to configure storage options.

An important option is --vnc, which opens a graphical window for the guest's installation.

Example 6.1. Using virt-install to install a RHEL 5 guest

The guest in this example:

- Uses LVM partitioning

- Is a plain QEMU guest

- Uses virtual networking

- Boots from PXE

- Uses VNC server/viewer

# virt-install \
   --network network:default \
   --name rhel5support --ram=756 \
   --file=/var/lib/libvirt/images/rhel5support.img \
   --file-size=6 --vnc --cdrom=/dev/sr0

Refer to man virt-install for more examples.
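When creating several similar guests, wrapping the command in a small function avoids retyping it. The following is a minimal sketch, assuming a POSIX shell and the virt-install options used in Example 6.1; create_guest and the DRY_RUN variable are hypothetical names introduced here. With DRY_RUN=1 it only prints the command, so you can review it before anything is created.

```shell
#!/bin/sh
# create_guest NAME RAM_MB SIZE_GB MEDIA
# Hypothetical wrapper around the virt-install options from Example 6.1.
# Set DRY_RUN=1 to print the command instead of running it.
create_guest() {
    name=$1      # guest name, for example rhel5support
    ram_mb=$2    # memory in MB
    size_gb=$3   # size of the new disk image in GB
    media=$4     # installation media, for example /dev/sr0 or an ISO file

    # Build the argument list in the positional parameters so that
    # values containing spaces stay intact.
    set -- virt-install \
        --network network:default \
        --name "$name" --ram="$ram_mb" \
        --file="/var/lib/libvirt/images/$name.img" \
        --file-size="$size_gb" \
        --vnc --cdrom="$media"

    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

For example, DRY_RUN=1 create_guest rhel5support 756 6 /dev/sr0 prints the command from Example 6.1 on one line.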

6.3. Creating guests with virt-manager

virt-manager, also known as Virtual Machine Manager, is a graphical tool for creating and managing virtualized guests.

Procedure 6.1. Creating a virtualized guest with virt-manager

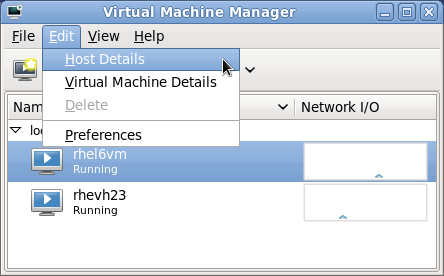

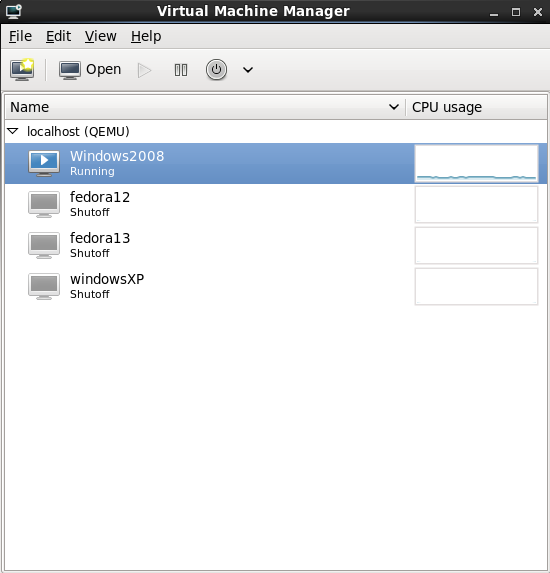

Open virt-manager

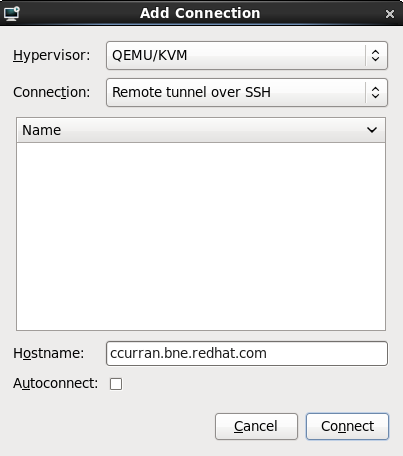

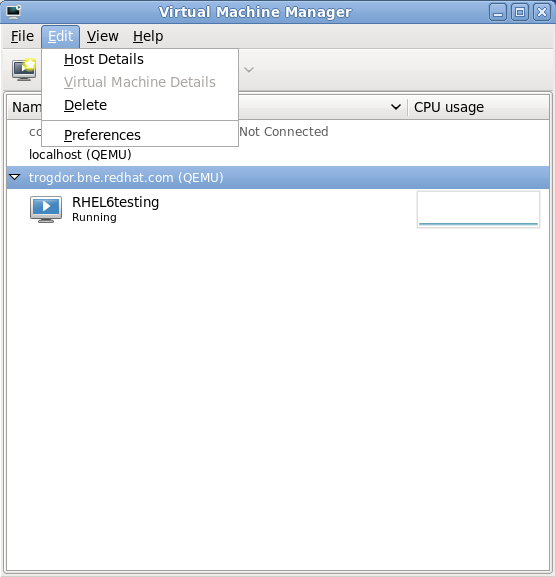

Start virt-manager. Launch the application from the Applications menu and System Tools submenu. Alternatively, run the virt-manager command as root.

Optional: Open a remote hypervisor

Select the hypervisor and connect to it.

Create a new guest

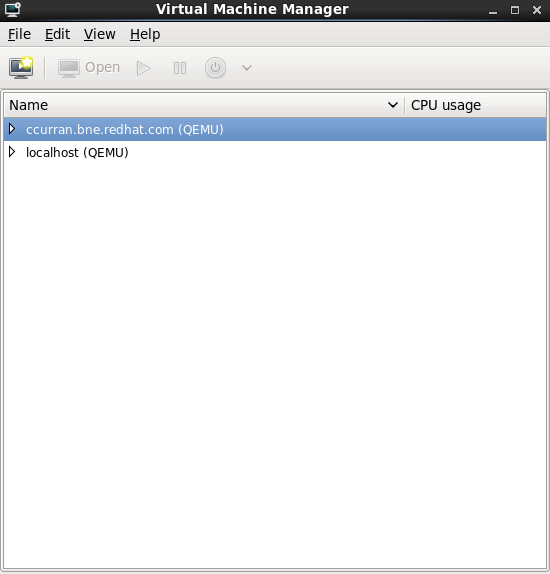

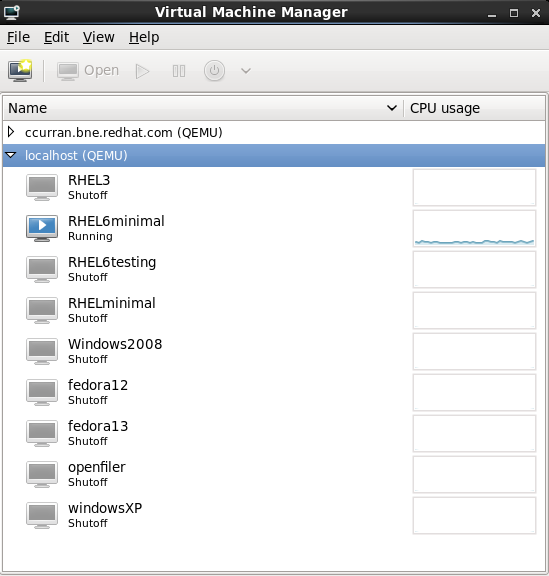

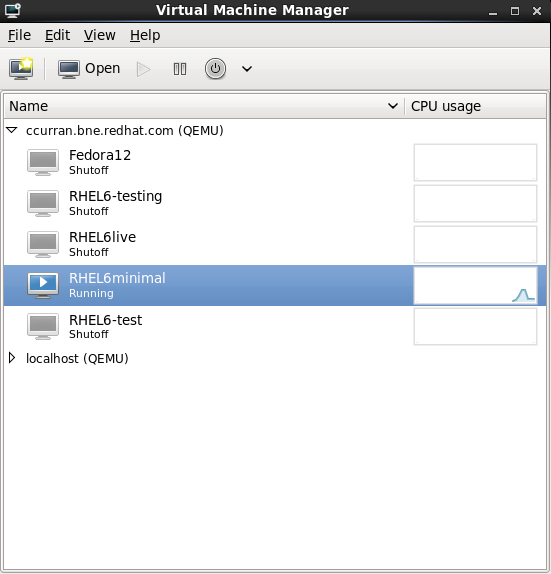

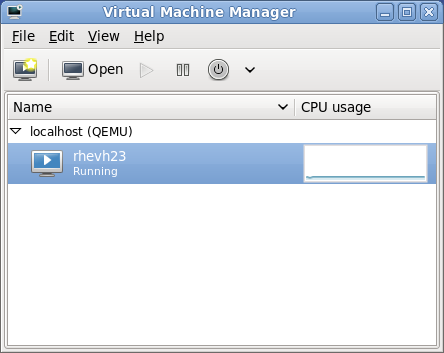

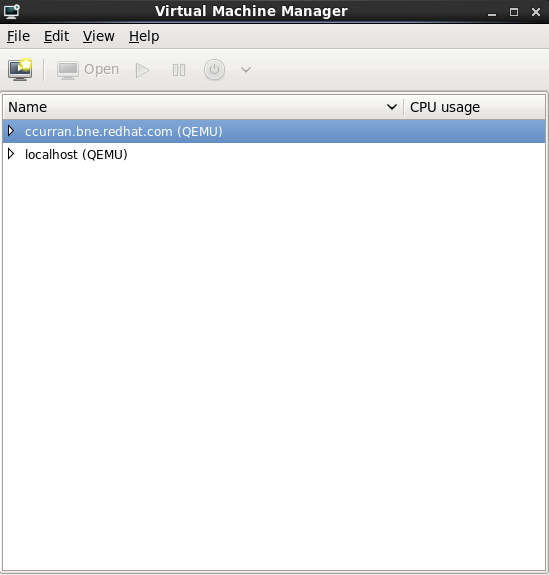

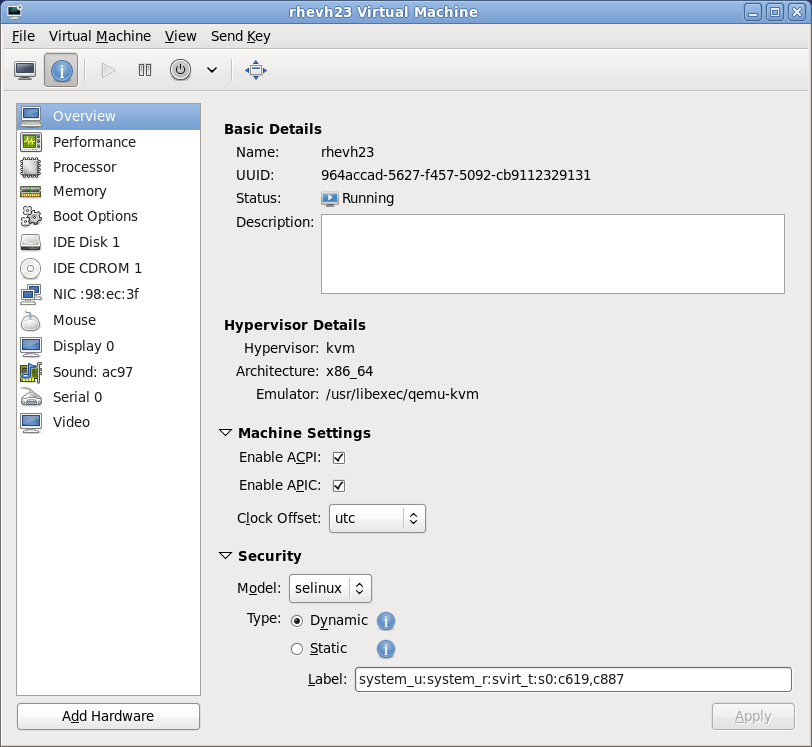

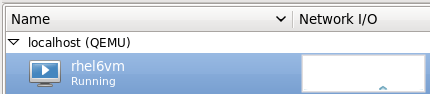

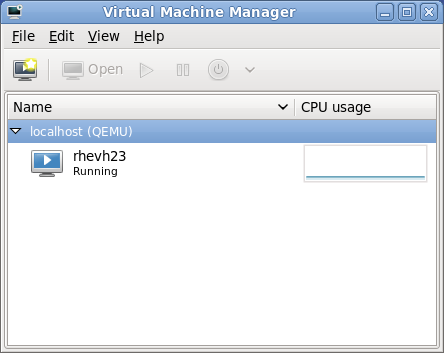

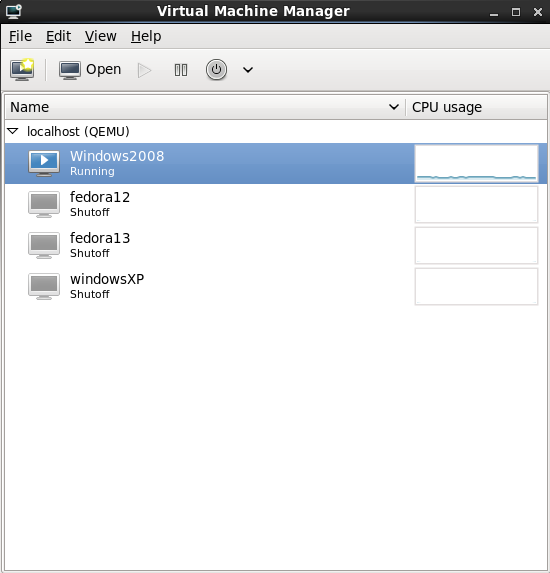

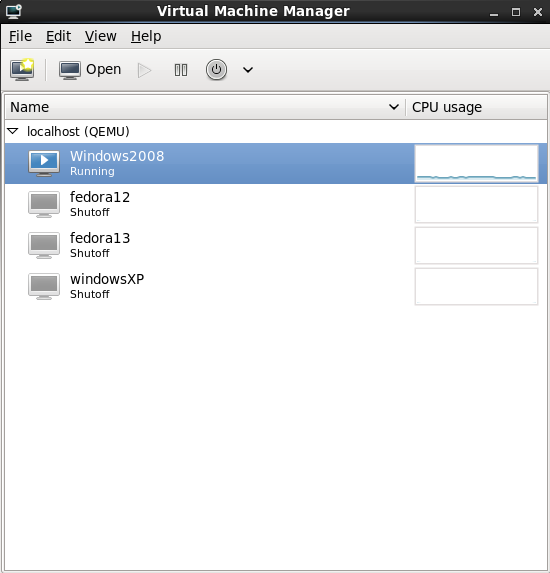

The virt-manager window allows you to create a new virtual machine. Click the create new virtualized guest button (see Figure 6.1, “Virtual Machine Manager window”) to open the wizard.

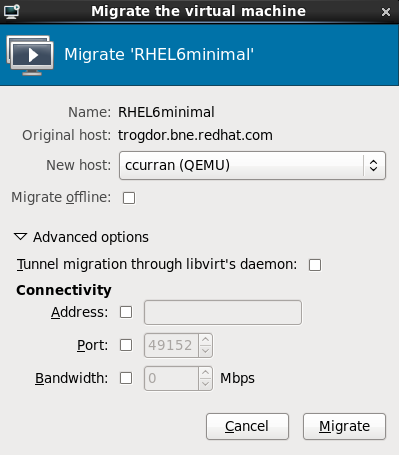

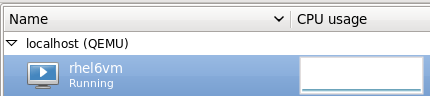

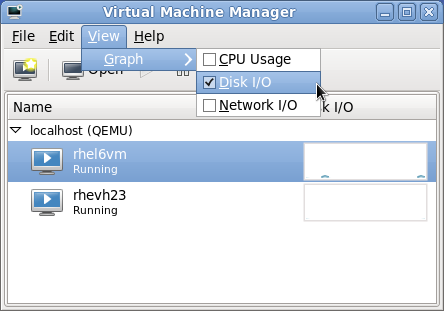

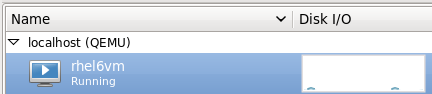

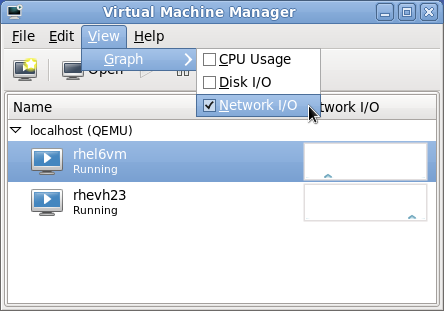

Figure 6.1. Virtual Machine Manager window

New VM wizard

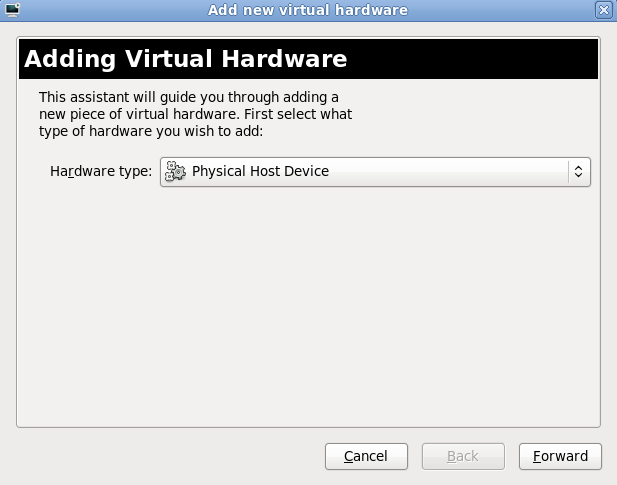

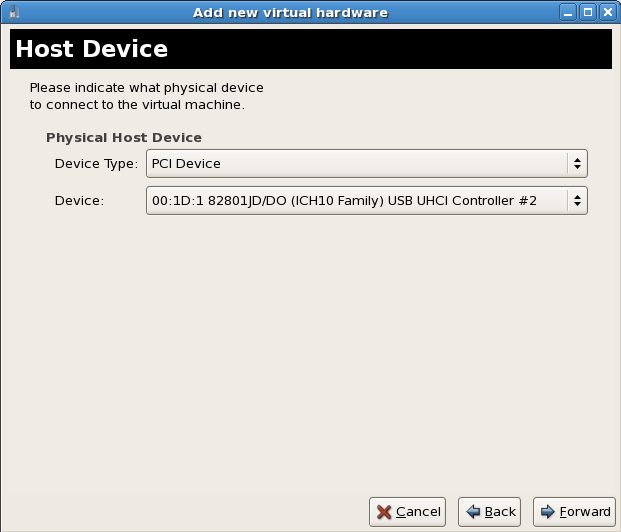

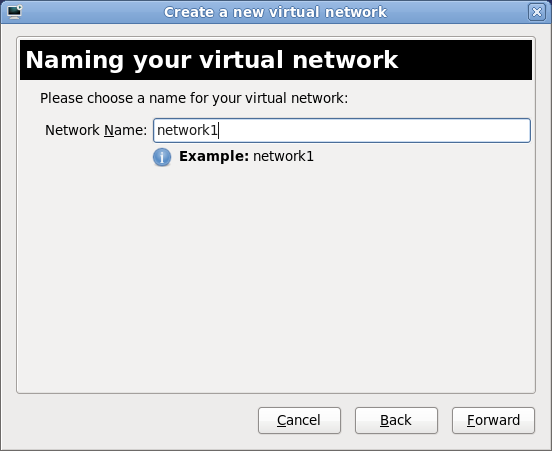

The wizard breaks down the guest creation process into five steps:

- Naming the guest and choosing the installation type

- Locating and configuring the installation media

- Configuring memory and CPU options

- Configuring the guest's storage

- Configuring networking, hypervisor type, architecture, and other hardware settings

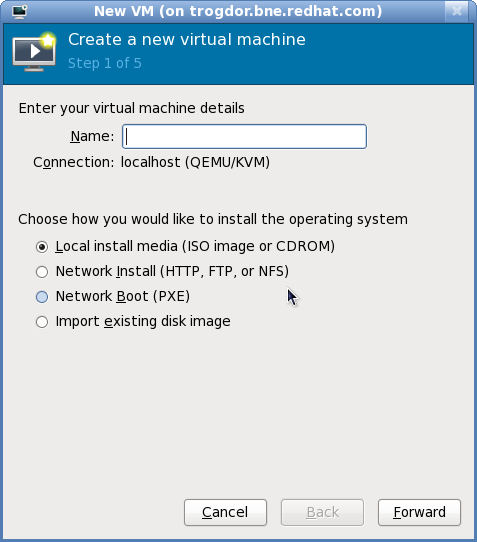

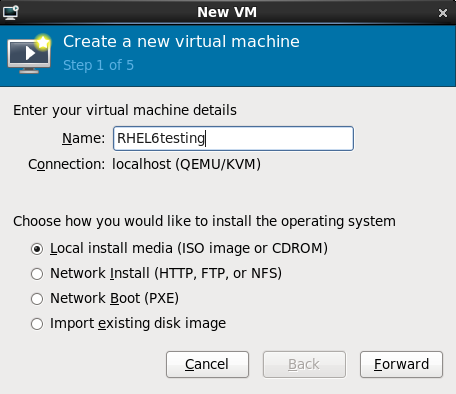

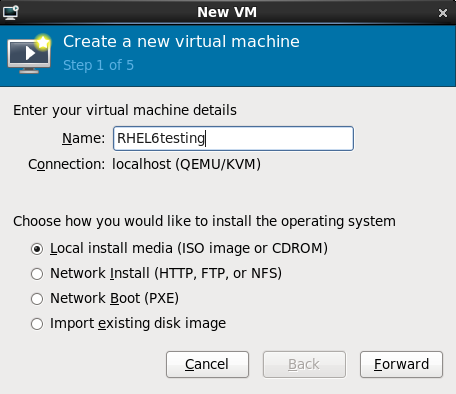

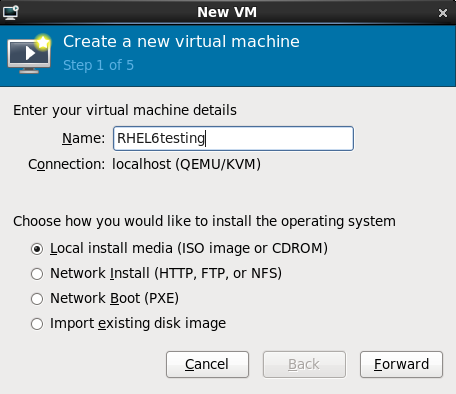

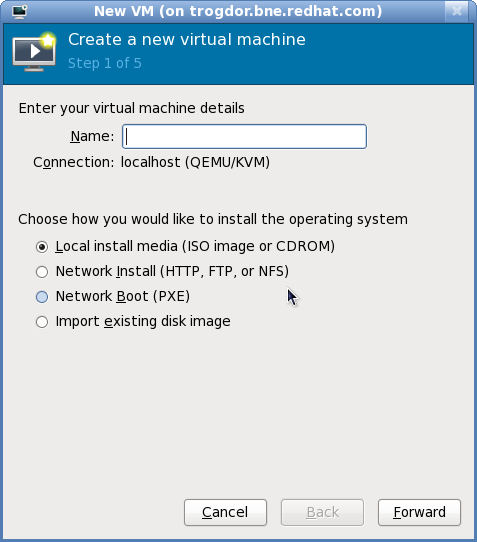

Ensure that virt-manager can access the installation media (whether locally or over the network).

Specify name and installation type

The guest creation process starts with the selection of a name and installation type. Virtual machine names can contain letters, numbers, underscores (_), periods (.), and hyphens (-).
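To check names in a script before calling virt-install, the naming rule above can be expressed as a shell pattern. This is a minimal sketch; valid_guest_name is a hypothetical helper, and it only enforces the character rule stated in this guide, not uniqueness.

```shell
#!/bin/sh
# valid_guest_name NAME
# Hypothetical helper: succeeds if NAME is non-empty and uses only
# letters, digits, underscores, periods and hyphens.
valid_guest_name() {
    case $1 in
        '' | *[!A-Za-z0-9_.-]*) return 1 ;;
        *) return 0 ;;
    esac
}
```

For example, valid_guest_name rhel6-guest_01 succeeds, while a name containing a space or a slash fails.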

Figure 6.2. Step 1

Type in a virtual machine name and choose an installation type:

- Local install media (ISO image or CDROM): This method uses a CD-ROM, DVD, or image of an installation disk (for example, .iso).

- Network Install (HTTP, FTP, or NFS): Network installing involves the use of a mirrored Red Hat Enterprise Linux or Fedora installation tree to install a guest. The installation tree must be accessible through either HTTP, FTP, or NFS.

- Network Boot (PXE): This method uses a Preboot eXecution Environment (PXE) server to install the guest. Setting up a PXE server is covered in the Deployment Guide. To install via network boot, the guest must have a routable IP address or shared network device. For information on the required networking configuration for PXE installation, refer to Chapter 10, Network Configuration.

- Import existing disk image: This method allows you to create a new guest and import a disk image (containing a pre-installed, bootable operating system) to it.

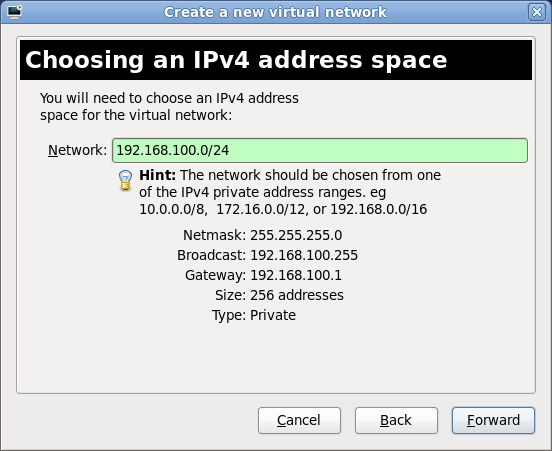

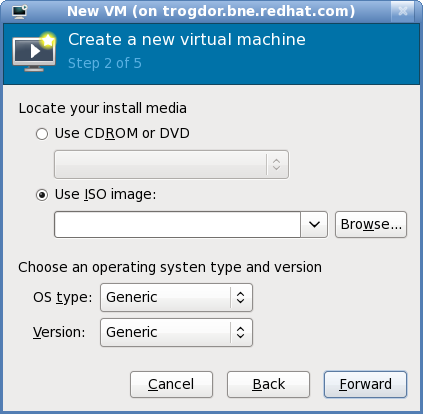

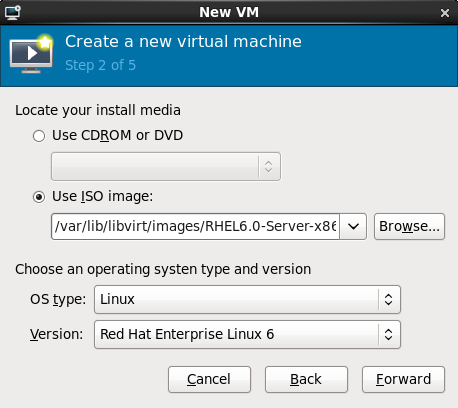

Continue to the next step.

Configure installation

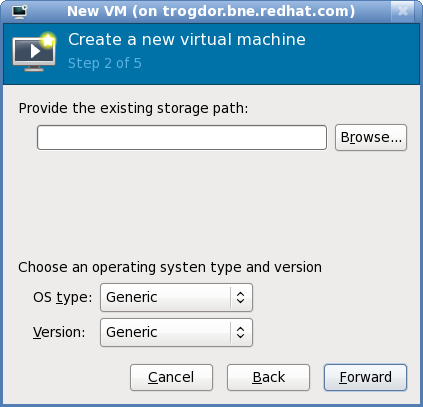

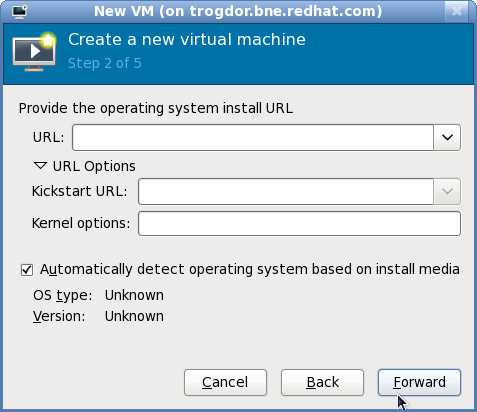

Next, configure the OS type and version of the installation. Except for network booting, this step also requires further configuration (depending on your chosen installation method). When using local install media or importing an existing disk image, you need to specify the location of the installation media or disk image.

Figure 6.3. Local install media (configuration)

Figure 6.4. Import existing disk image (configuration)

Important

It is recommended that you use the default directory for virtual machine images, /var/lib/libvirt/images/. If you are using a different location, make sure it is added to your SELinux policy and relabeled before you continue with the installation. Refer to Section 16.2, “SELinux and virtualization” for details on how to do this.

When performing a network install, you need to specify the URL of the installation tree. You can also specify the URL of any Kickstart files you want to use, along with any kernel options you want to pass during installation.

Figure 6.5. Network Install (configuration)

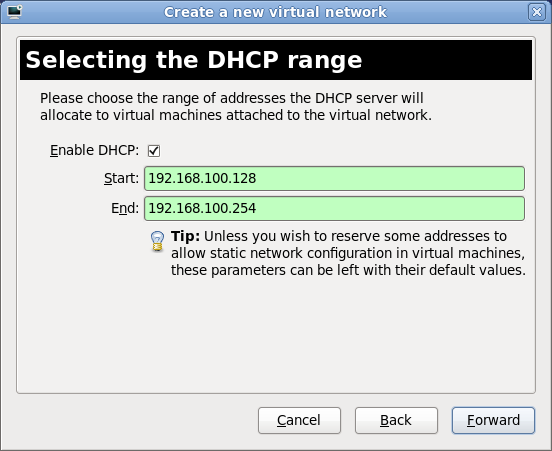

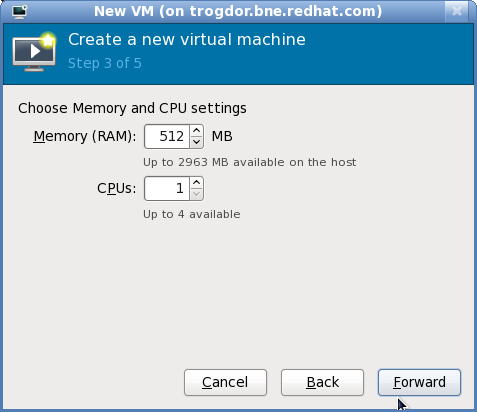

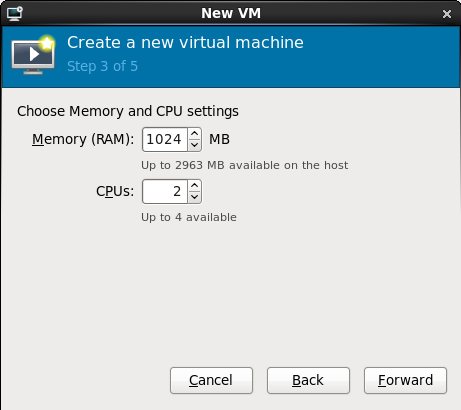

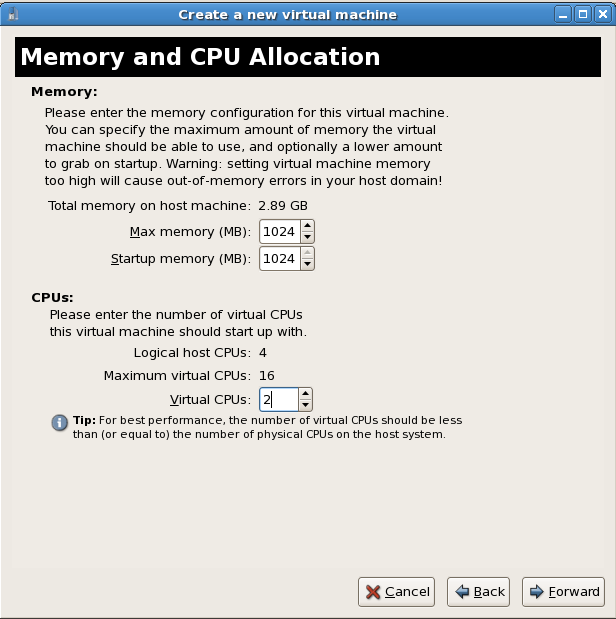

Continue to the next step.

Configure CPU and memory

The next step involves configuring the number of CPUs and amount of memory to allocate to the virtual machine. The wizard shows the number of CPUs and amount of memory you can allocate; configure these settings and continue to the next step.

Figure 6.6. Configuring CPU and Memory

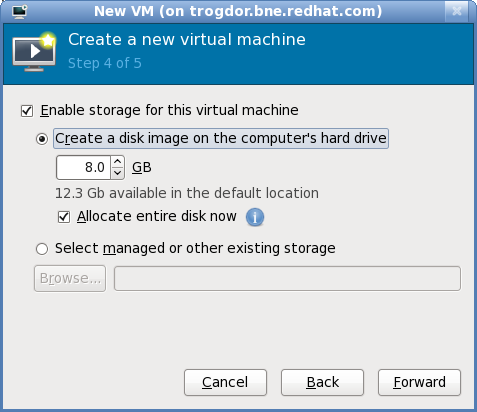

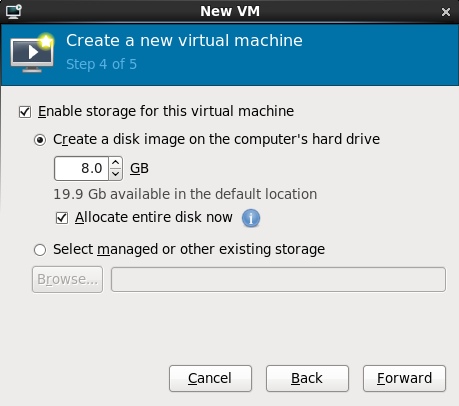

Configure storage

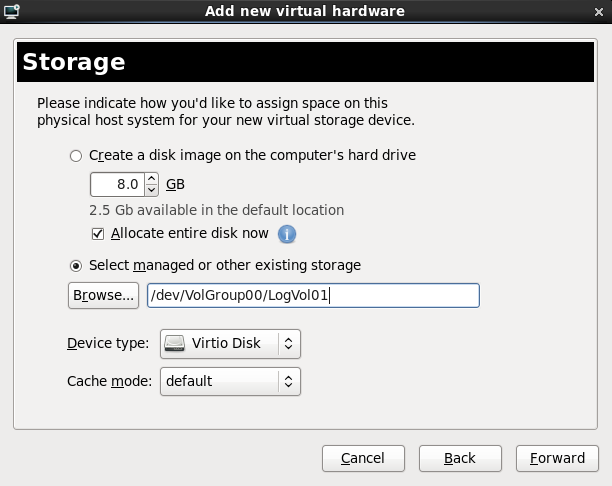

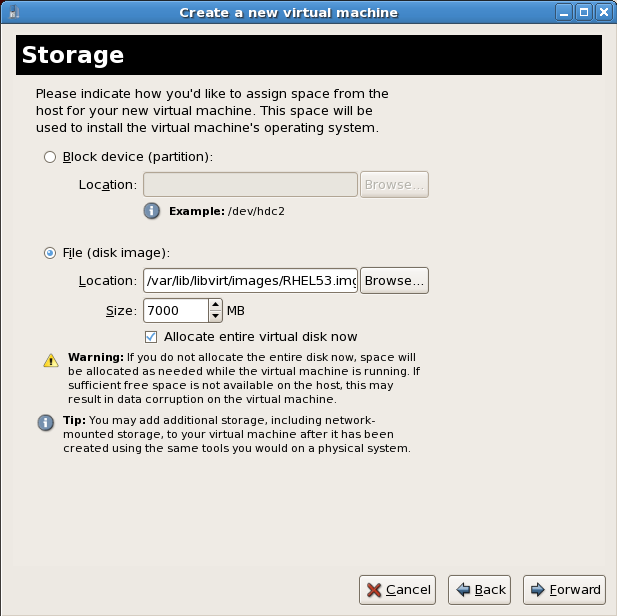

Assign a physical storage device (Block device) or a file-based image (File). File-based images should be stored in /var/lib/libvirt/images/ to satisfy default SELinux permissions.

Figure 6.7. Configuring virtual storage

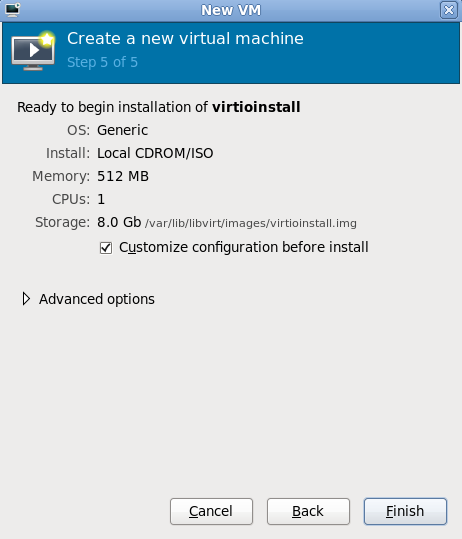

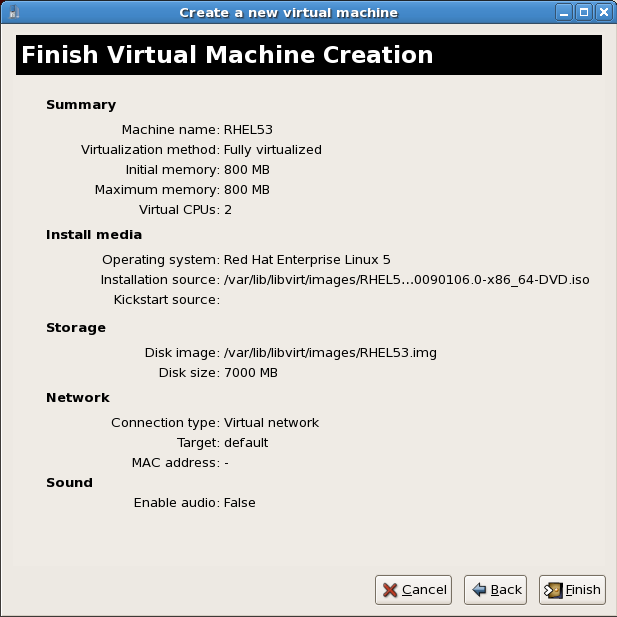

If you chose to import an existing disk image during the first step, virt-manager skips this step.

Assign sufficient space for your virtualized guest and any applications the guest requires, then continue to the next step.

Final configuration

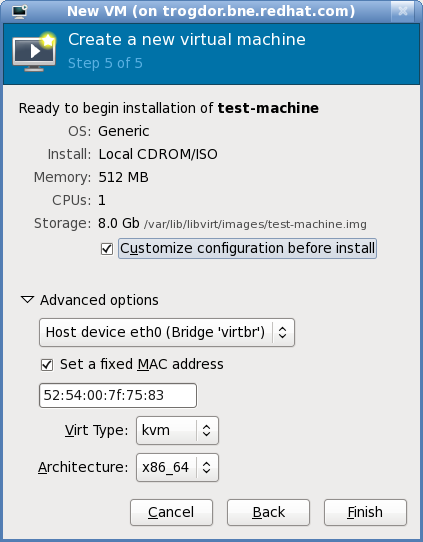

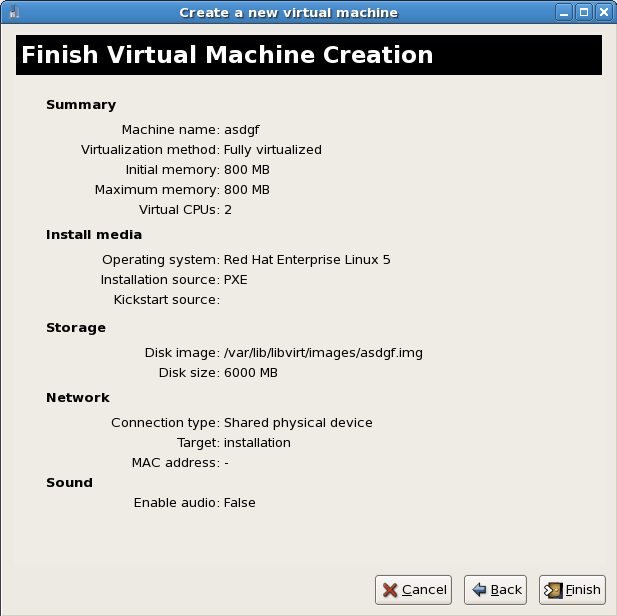

Verify the settings of the virtual machine and finish the wizard when you are satisfied; doing so creates the guest with default networking settings, virtualization type, and architecture.

Figure 6.8. Verifying the configuration

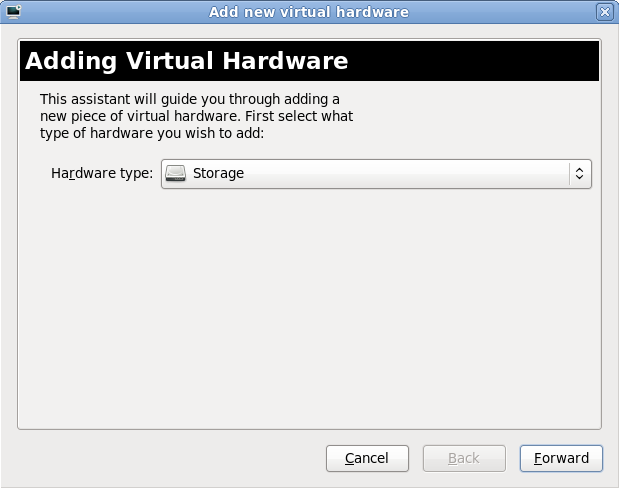

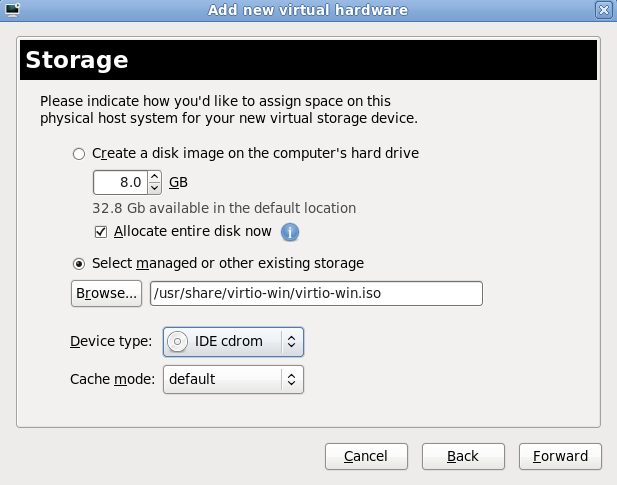

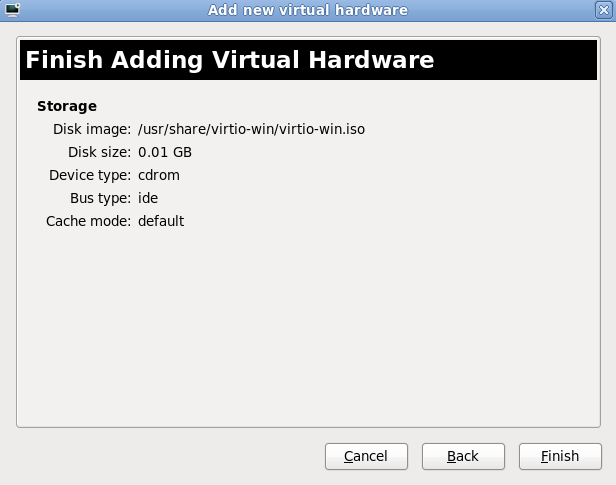

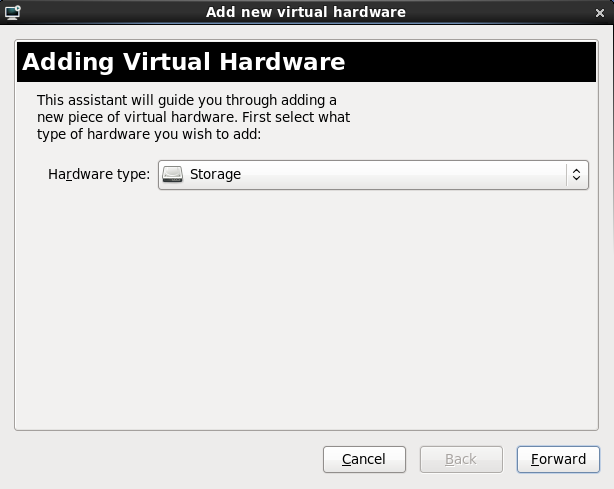

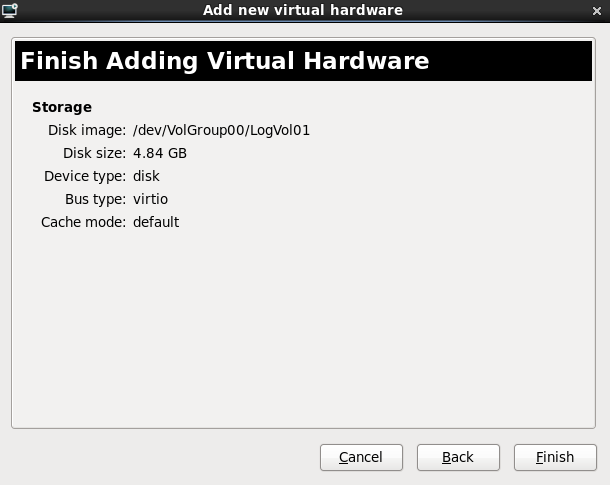

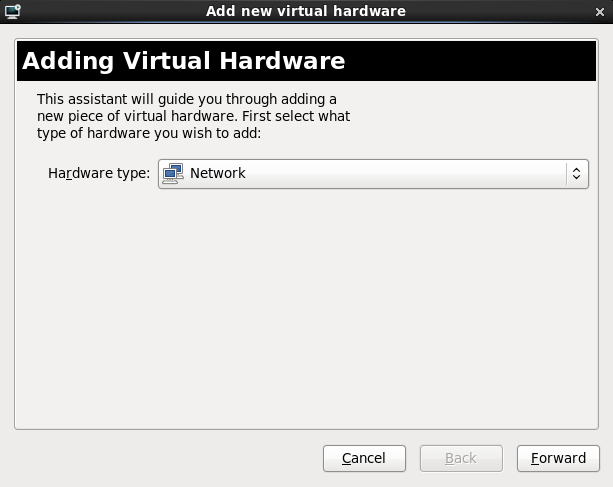

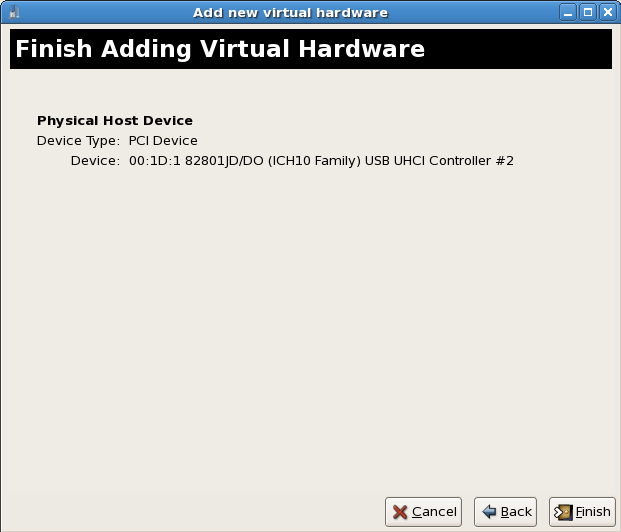

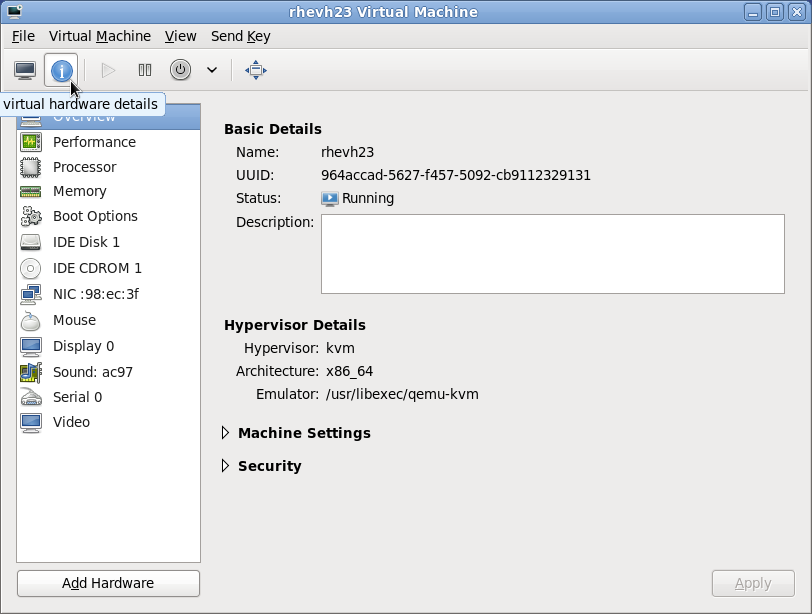

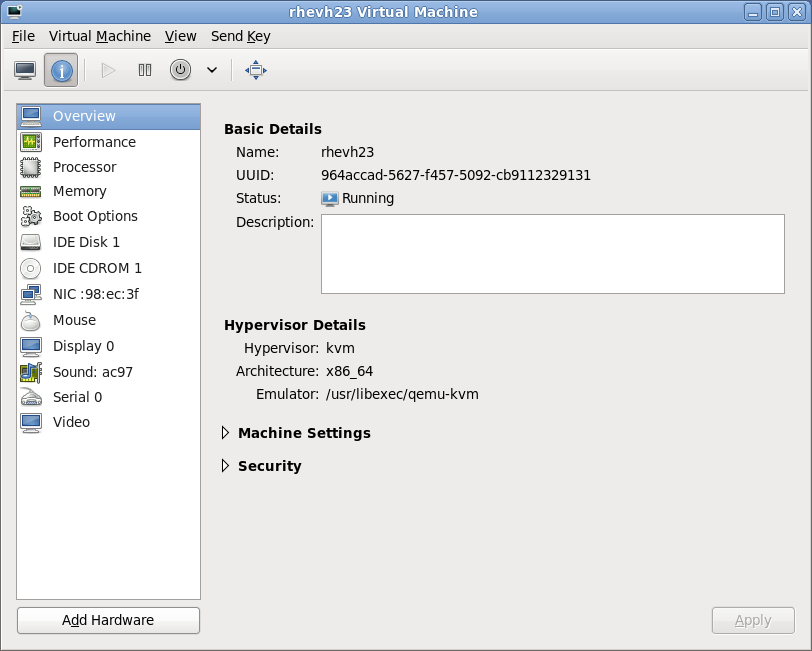

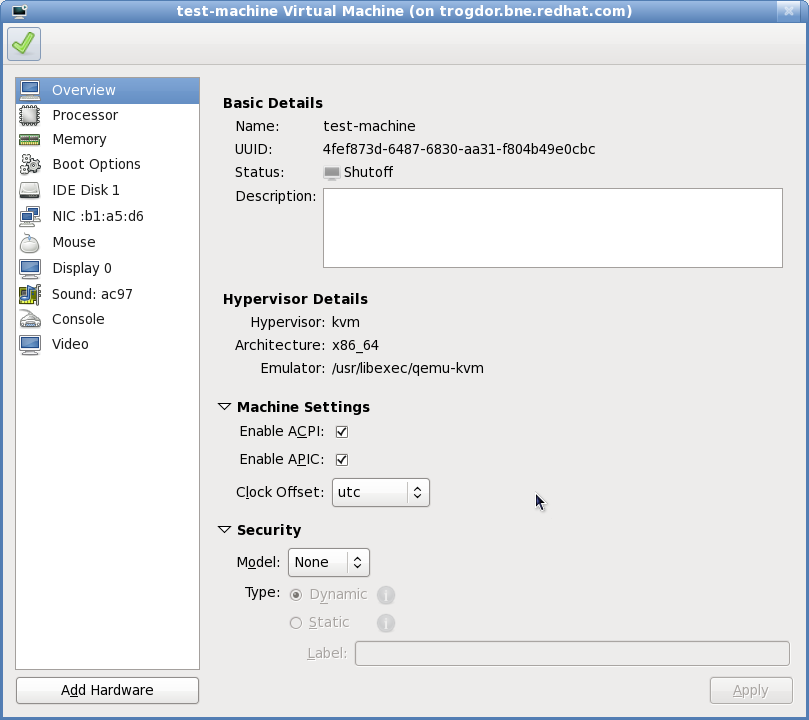

If you prefer to further configure the virtual machine's hardware first, select the Customize configuration before install check box before finishing the wizard. Doing so opens another wizard (Figure 6.9, “Virtual hardware configuration”) that allows you to add, remove, and configure the virtual machine's hardware settings.

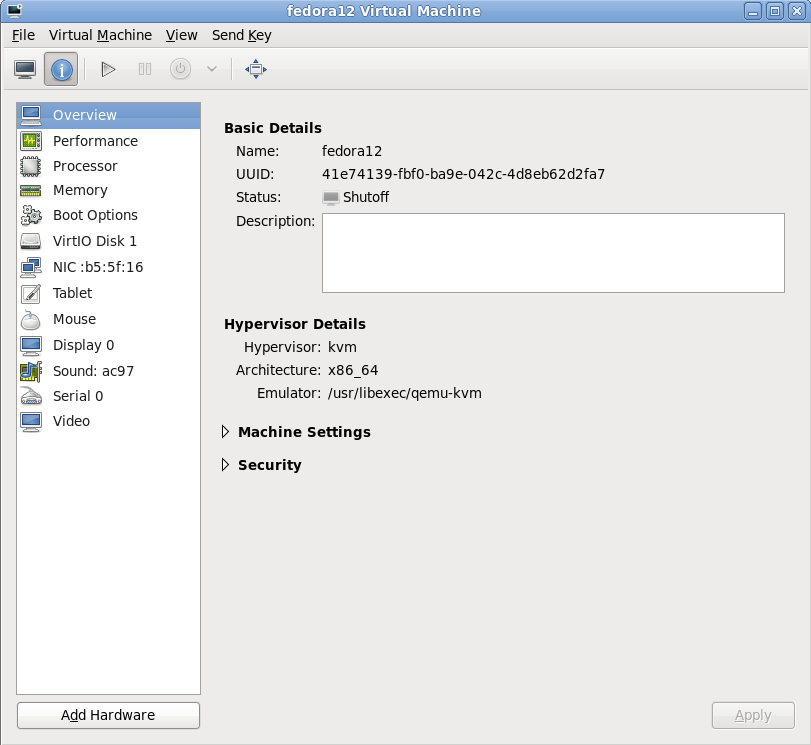

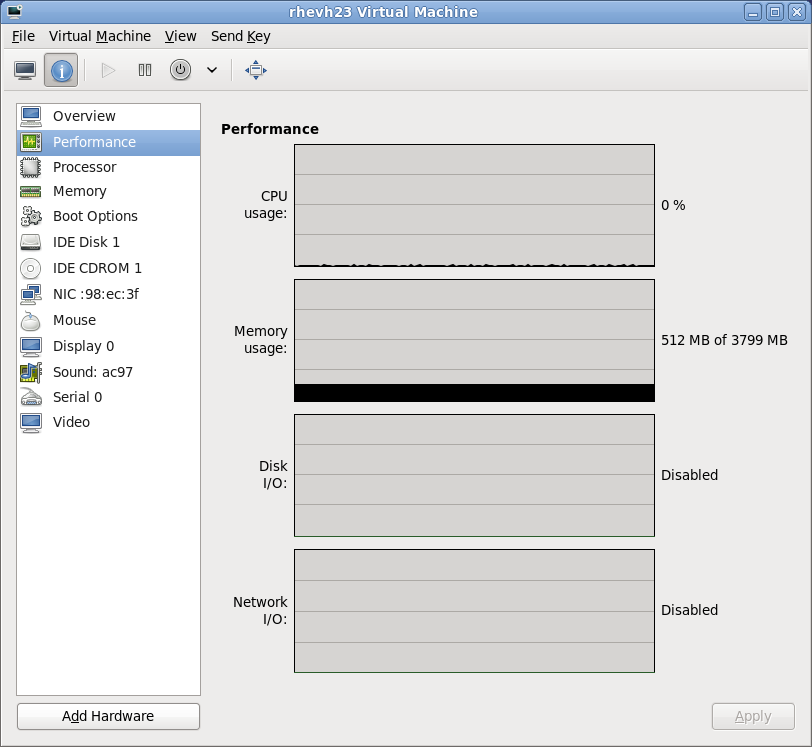

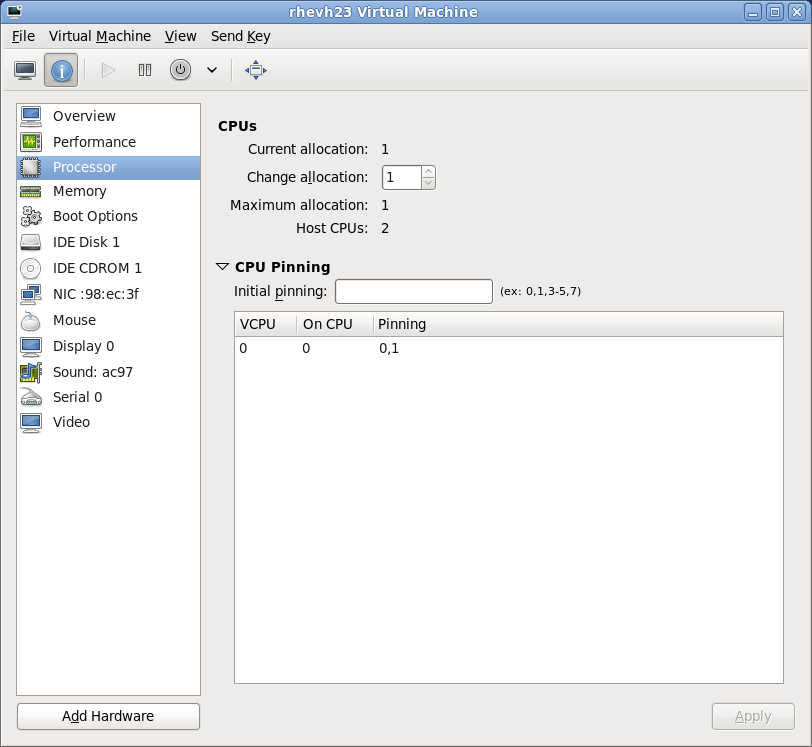

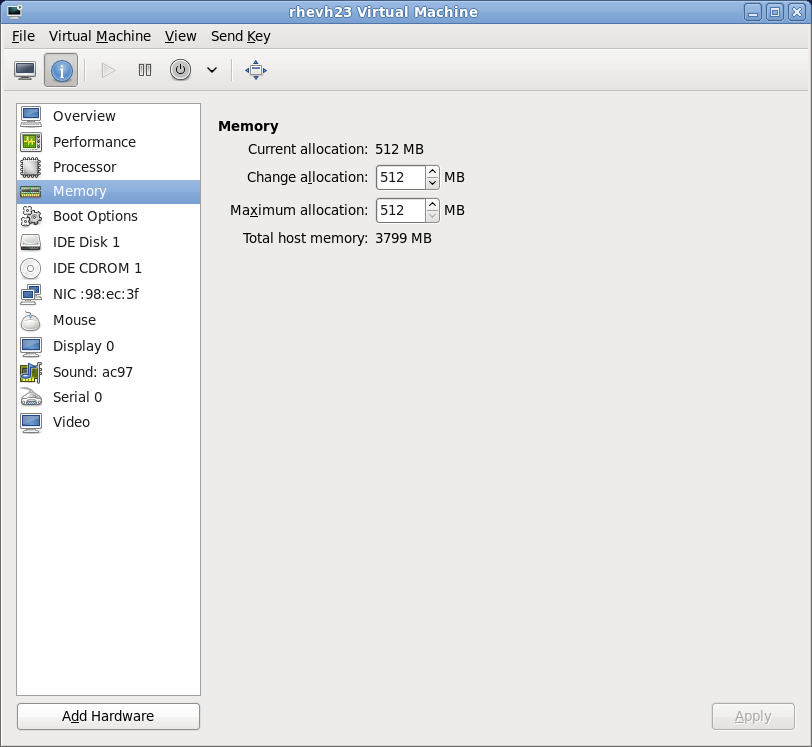

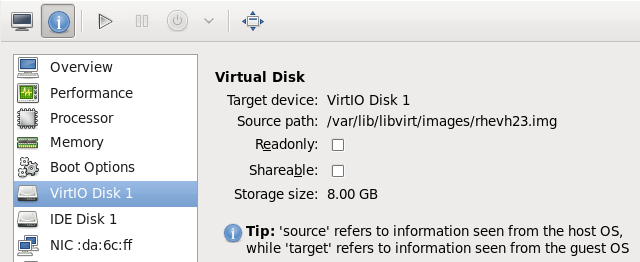

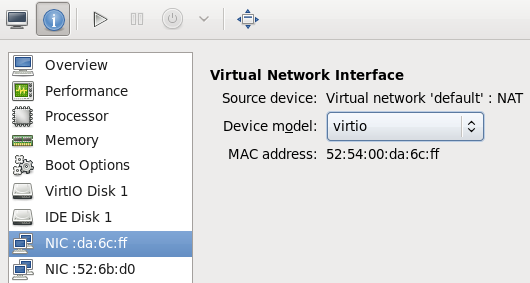

Figure 6.9. Virtual hardware configuration

After configuring the virtual machine's hardware, confirm your changes. virt-manager then creates the guest with your specified hardware settings.

This concludes the general process for creating guests with virt-manager. Chapter 6, Virtualized guest installation overview lists the chapters containing step-by-step instructions for installing a variety of common operating systems.

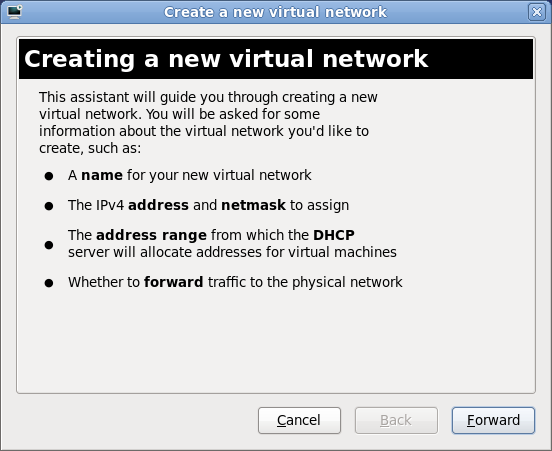

6.4. Installing guests with PXE

Create a new bridge

- Create a new network script file in the /etc/sysconfig/network-scripts/ directory. This example creates a file named ifcfg-installation which makes a bridge named installation.

# cd /etc/sysconfig/network-scripts/
# vim ifcfg-installation
DEVICE=installation
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes

Warning

The line, TYPE=Bridge, is case-sensitive. It must have uppercase 'B' and lowercase 'ridge'.

- Start the new bridge by restarting the network service. The ifup installation command can start the individual bridge, but it is safer to test that the entire network restarts properly.

# service network restart

- There are no interfaces added to the new bridge yet. Use the brctl show command to view details about network bridges on the system.

# brctl show
bridge name     bridge id               STP enabled     interfaces
installation    8000.000000000000       no
virbr0          8000.000000000000       yes

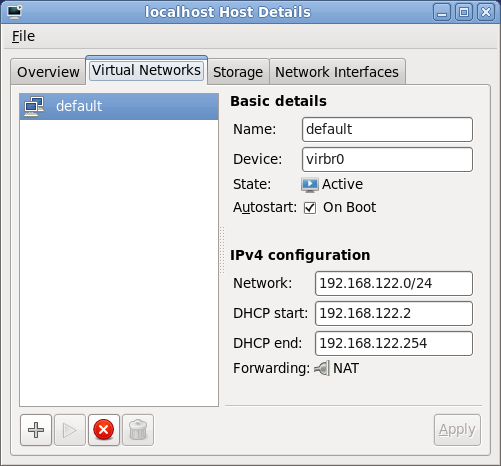

The virbr0 bridge is the default bridge used by libvirt for Network Address Translation (NAT) on the default Ethernet device.
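The bridge file from the first step can also be generated by a script, for example when preparing several hosts. The following is a minimal sketch, assuming a POSIX shell; write_bridge_ifcfg is a hypothetical helper. The target directory is a parameter so the output can be inspected in a scratch directory before writing to /etc/sysconfig/network-scripts/.

```shell
#!/bin/sh
# write_bridge_ifcfg BRIDGE [DIR]
# Hypothetical helper: write DIR/ifcfg-BRIDGE defining a DHCP bridge
# (default DIR: /etc/sysconfig/network-scripts).
write_bridge_ifcfg() {
    bridge=$1
    dir=${2:-/etc/sysconfig/network-scripts}
    # TYPE=Bridge is case-sensitive: uppercase 'B', lowercase 'ridge'.
    cat > "$dir/ifcfg-$bridge" <<EOF
DEVICE=$bridge
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes
EOF
}
```

Run as root, write_bridge_ifcfg installation creates the same ifcfg-installation file as the manual step; restart the network service afterwards as described above.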

Add an interface to the new bridge

Edit the configuration file for the interface. Add the BRIDGE parameter to the configuration file with the name of the bridge created in the previous steps.

# Intel Corporation Gigabit Network Connection
DEVICE=eth1
BRIDGE=installation
BOOTPROTO=dhcp
HWADDR=00:13:20:F7:6E:8E
ONBOOT=yes

After editing the configuration file, restart networking or reboot.

# service network restart

Verify the interface is attached with the brctl show command:

# brctl show
bridge name     bridge id               STP enabled     interfaces
installation    8000.001320f76e8e       no              eth1
virbr0          8000.000000000000       yes
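The two manual steps above, adding the BRIDGE parameter and checking the brctl show output, can be scripted. The following is a minimal sketch, assuming a POSIX shell with sed and awk; set_bridge and bridge_has_iface are hypothetical helpers. bridge_has_iface assumes the column layout shown above (bridge name, bridge id, STP enabled, interfaces), with any additional interfaces on indented continuation lines.

```shell
#!/bin/sh
# set_bridge CFG BRIDGE
# Hypothetical helper: set BRIDGE=BRIDGE in an interface ifcfg file,
# replacing an existing BRIDGE= line or appending one.
set_bridge() {
    cfg=$1
    bridge=$2
    if grep -q '^BRIDGE=' "$cfg"; then
        sed "s/^BRIDGE=.*/BRIDGE=$bridge/" "$cfg" > "$cfg.tmp" &&
            mv "$cfg.tmp" "$cfg"
    else
        echo "BRIDGE=$bridge" >> "$cfg"
    fi
}

# bridge_has_iface BRIDGE IFACE < brctl-show-output
# Hypothetical helper: succeeds if IFACE is listed under BRIDGE.
bridge_has_iface() {
    awk -v br="$1" -v ifc="$2" '
        NR == 1 { next }                # skip the column header
        /^[^ \t]/ {                     # a new bridge row
            cur = $1
            if (cur == br && NF >= 4 && $4 == ifc) found = 1
            next
        }
        cur == br && $1 == ifc { found = 1 }   # indented extra interface
        END { exit !found }
    '
}
```

For example, set_bridge /etc/sysconfig/network-scripts/ifcfg-eth1 installation edits the interface file, and after restarting networking, brctl show | bridge_has_iface installation eth1 && echo attached confirms the result.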

Security configuration

Configure iptables to allow all traffic to be forwarded across the bridge.

# iptables -I FORWARD -m physdev --physdev-is-bridged -j ACCEPT
# service iptables save
# service iptables restart

Disable iptables on bridges

Alternatively, prevent bridged traffic from being processed by iptables rules. In /etc/sysctl.conf, append the following lines:

net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0

Reload the kernel parameters configured with sysctl.

# sysctl -p /etc/sysctl.conf
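Appending the lines by hand more than once leaves duplicate entries. The following minimal sketch, assuming a POSIX shell with sed, sets each of the three keys to 0, rewriting an existing value or appending the key if it is missing; disable_bridge_nf is a hypothetical helper, and the file path is a parameter so it can be tried on a copy first.

```shell
#!/bin/sh
# disable_bridge_nf [CONF]
# Hypothetical helper: set the three bridge-netfilter keys to 0 in CONF
# (default /etc/sysctl.conf) without creating duplicate entries.
disable_bridge_nf() {
    conf=${1:-/etc/sysctl.conf}
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-arptables; do
        if grep -q "^$key[[:space:]]*=" "$conf"; then
            # Key present: rewrite its value to 0.
            sed "s/^$key[[:space:]]*=.*/$key = 0/" "$conf" > "$conf.tmp" &&
                mv "$conf.tmp" "$conf"
        else
            # Key missing: append it.
            echo "$key = 0" >> "$conf"
        fi
    done
}
```

After running disable_bridge_nf as root, reload the settings with sysctl -p /etc/sysctl.conf as shown above.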

Restart libvirt before the installation

Restart the libvirt daemon.

# service libvirtd reload

PXE installation with virt-install

For virt-install, append the --network=bridge:installation parameter, where installation is the name of your bridge. For PXE installations, use the --pxe parameter.

Example 6.2. PXE installation with virt-install

# virt-install --hvm --connect qemu:///system \
   --network=bridge:installation --pxe \
   --name EL10 --ram=756 \
   --vcpus=4 \
   --os-type=linux --os-variant=rhel5 \
   --file=/var/lib/libvirt/images/EL10.img
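virt-install needs the bridge named in --network=bridge: to exist. A Linux bridge device has a bridge subdirectory under /sys/class/net/, so a script can check for it before starting the installation. This is a minimal sketch; bridge_exists is a hypothetical helper, and the sysfs directory is a parameter only so the check can be tested.

```shell
#!/bin/sh
# bridge_exists NAME [SYSFS_NET]
# Hypothetical helper: succeeds if NAME is a bridge device, that is, if
# SYSFS_NET/NAME/bridge exists (default SYSFS_NET: /sys/class/net).
bridge_exists() {
    sysfs_net=${2:-/sys/class/net}
    [ -d "$sysfs_net/$1/bridge" ]
}
```

For example, bridge_exists installation || echo 'create the installation bridge first' >&2 stops a script from calling virt-install with a missing bridge.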

PXE installation with virt-manager

The following steps are the ones that differ from the standard virt-manager installation procedures.

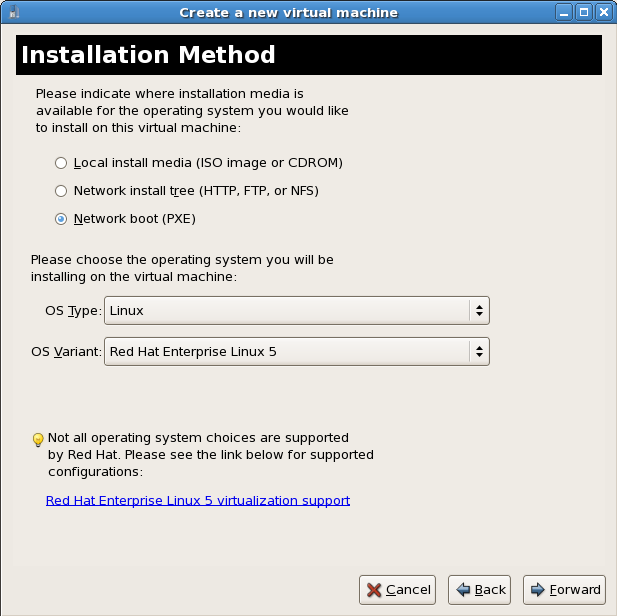

Select PXE

Select PXE as the installation method.

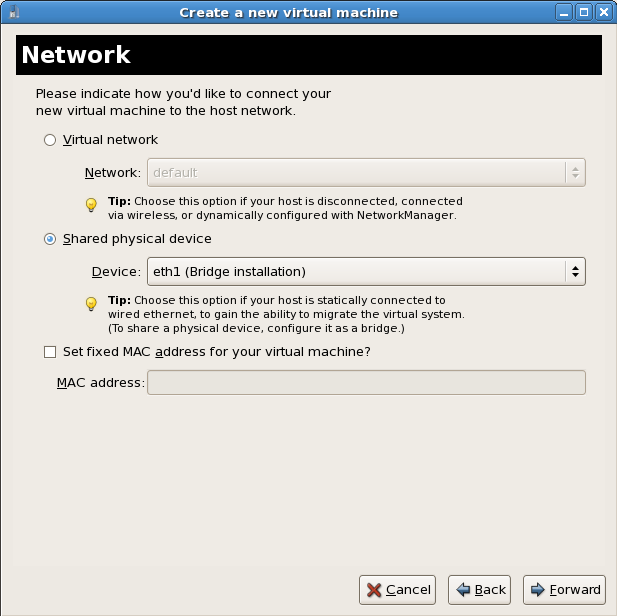

Select the bridge

Select Shared physical device and select the bridge created in the previous procedure.

Start the installation

The installation is ready to start.

Chapter 7. Installing Red Hat Enterprise Linux 6 as a virtualized guest

7.1. Creating a Red Hat Enterprise Linux 6 guest with local installation media

Procedure 7.1. Creating a Red Hat Enterprise Linux 6 guest with virt-manager

Optional: Preparation

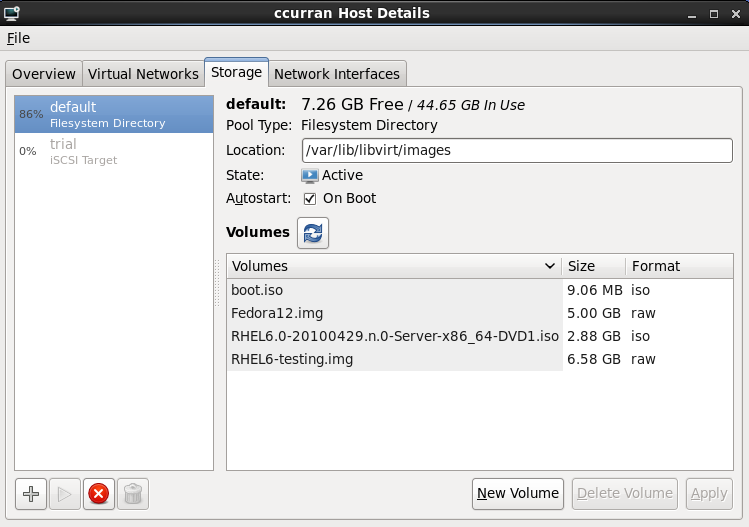

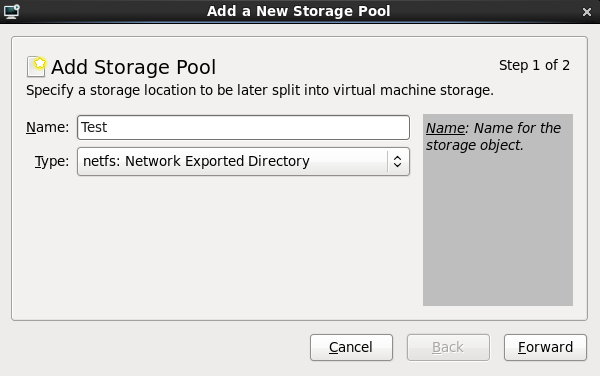

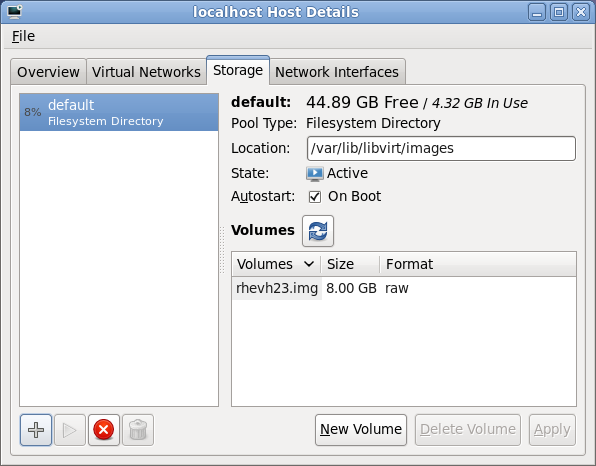

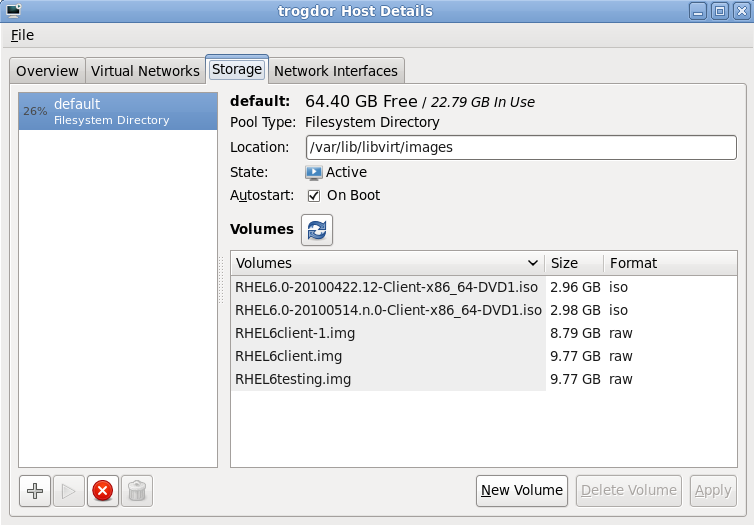

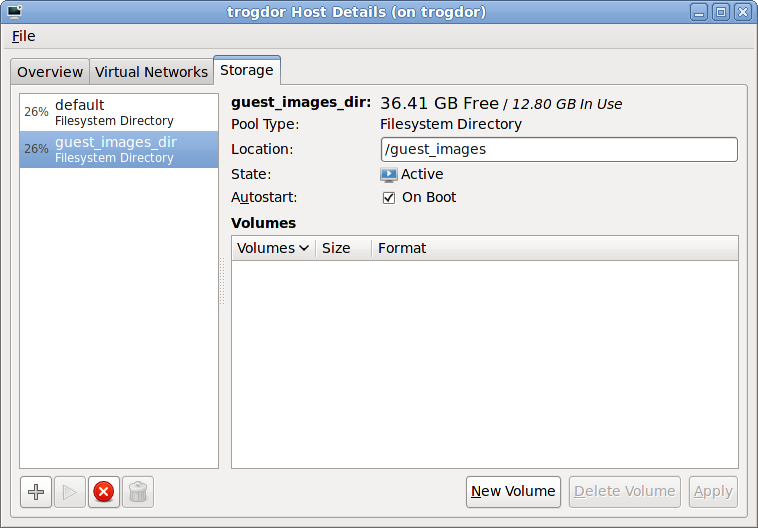

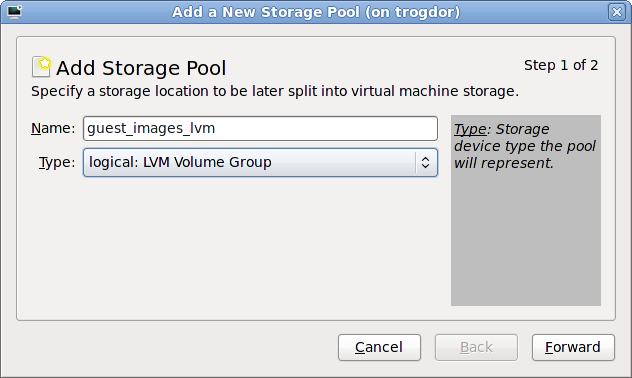

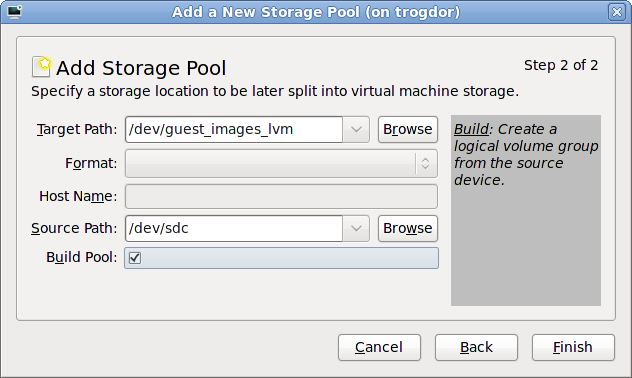

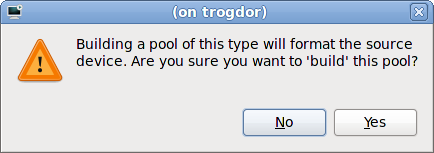

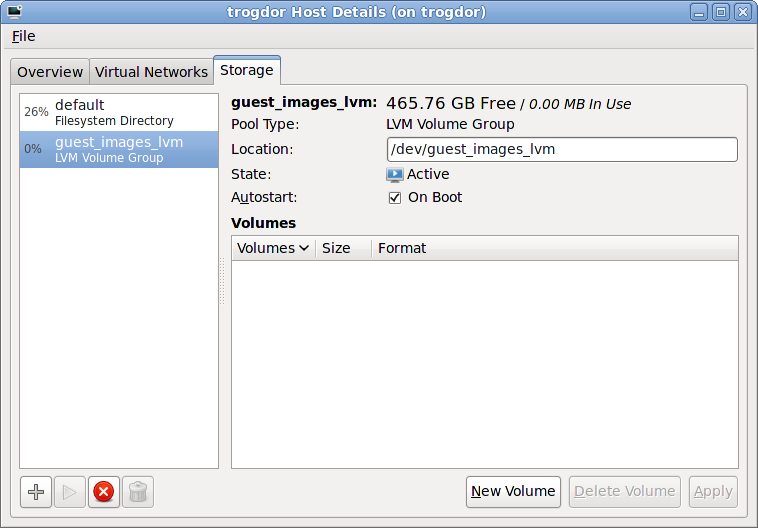

Prepare the storage environment for the virtualized guest. For more information on preparing storage, refer to Part V, “Virtualization storage topics”.

Note

Various storage types may be used for storing virtualized guests. However, for a guest to be able to use migration features, the guest must be created on networked storage.

Red Hat Enterprise Linux 6 requires at least 1GB of storage space. However, Red Hat recommends at least 5GB of storage space for a Red Hat Enterprise Linux 6 installation and for the procedures in this guide.

Open virt-manager and start the wizard

Open virt-manager by executing the virt-manager command as root or opening Applications -> System Tools -> Virtual Machine Manager.

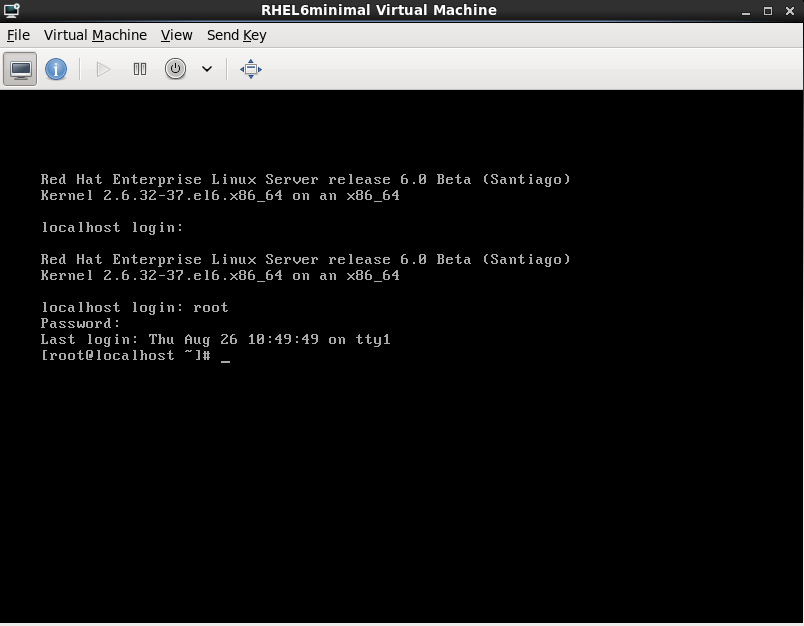

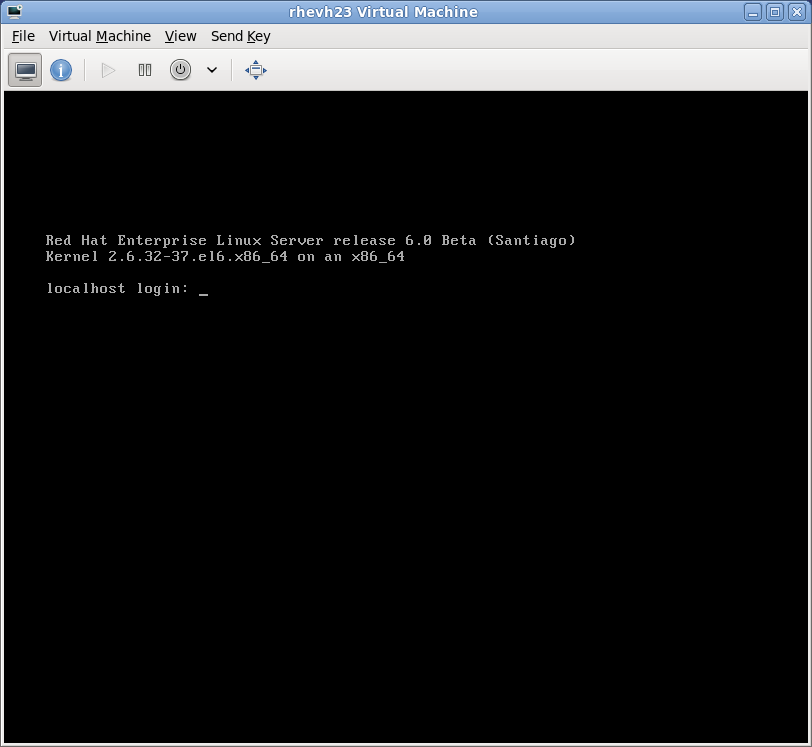

Figure 7.1. The main virt-manager window

Press the create new virtualized guest button (see Figure 7.2, “The create new virtualized guest button”) to start the new virtualized guest wizard.

Figure 7.2. The create new virtualized guest button

The Create a new virtual machine window opens.

Name the virtualized guest

Guest names can contain letters, numbers and the following characters: '_', '.' and '-'. Guest names must be unique for migration.

Choose the Local install media (ISO image or CDROM) radio button.

Figure 7.3. The Create a new virtual machine window - Step 1

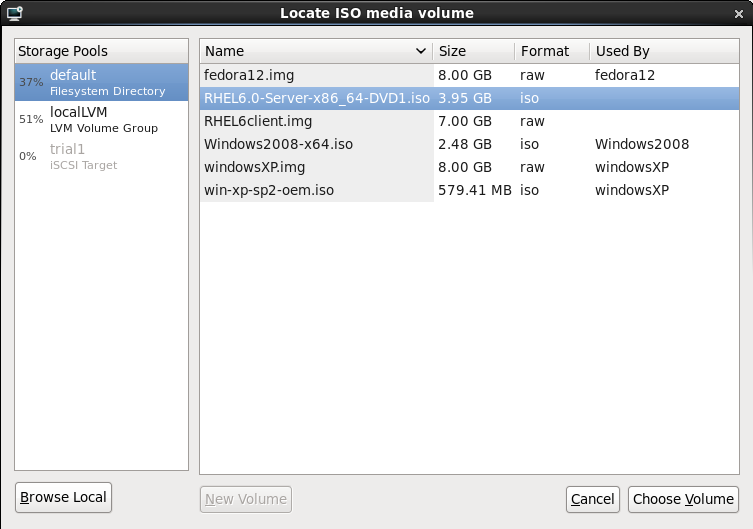

Continue to the next step.

Select the installation media

Select the installation ISO image location or a DVD drive with the installation disc inside. This example uses an ISO file image of the Red Hat Enterprise Linux 6.0 installation DVD image.

Figure 7.4. The Locate ISO media volume window

Image files and SELinux

For ISO image files and guest storage images, the recommended directory to use is the /var/lib/libvirt/images/ directory. Any other location may require additional configuration for SELinux; refer to Section 16.2, “SELinux and virtualization” for details.

Select the operating system type and version which match the installation media you have selected.

Figure 7.5. The Create a new virtual machine window - Step 2

Continue to the next step.

Set RAM and virtual CPUs

Choose appropriate values for the virtualized CPUs and RAM allocation. These values affect the host's and guest's performance. Memory and virtualized CPUs can be overcommitted. For more information on overcommitting, refer to Chapter 20, Overcommitting with KVM.

Virtualized guests require sufficient physical memory (RAM) to run efficiently and effectively. Red Hat supports a minimum of 512MB of RAM for a virtualized guest. Red Hat recommends at least 1024MB of RAM for each logical core.

Assign sufficient virtual CPUs for the virtualized guest. If the guest runs a multithreaded application, assign the number of virtualized CPUs the guest will require to run efficiently.

You cannot assign more virtual CPUs than there are physical processors (or hyper-threads) available on the host system. The number of virtual CPUs available is noted in the Up to X available field.
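The two hard limits in this step can be checked before starting the wizard or a virt-install command. The following is a minimal sketch, assuming a POSIX shell; check_alloc is a hypothetical helper. It checks only the 512MB minimum and the virtual CPU limit; it does not apply the per-core memory recommendation or account for overcommitting. The host CPU count is passed in; on a Linux host, getconf _NPROCESSORS_ONLN reports the number of online logical CPUs.

```shell
#!/bin/sh
# check_alloc RAM_MB VCPUS HOST_CPUS
# Hypothetical helper: reject allocations below the 512MB minimum or with
# more virtual CPUs than the host has logical CPUs.
check_alloc() {
    ram_mb=$1
    vcpus=$2
    host_cpus=$3
    if [ "$ram_mb" -lt 512 ]; then
        echo "RAM below the supported minimum of 512MB" >&2
        return 1
    fi
    if [ "$vcpus" -gt "$host_cpus" ]; then
        echo "$vcpus virtual CPUs requested, host has $host_cpus" >&2
        return 1
    fi
    return 0
}
```

For example, check_alloc 1024 2 "$(getconf _NPROCESSORS_ONLN)" checks a 1024MB, two-CPU guest against the current host.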

Figure 7.6. The Create a new virtual machine window - Step 3

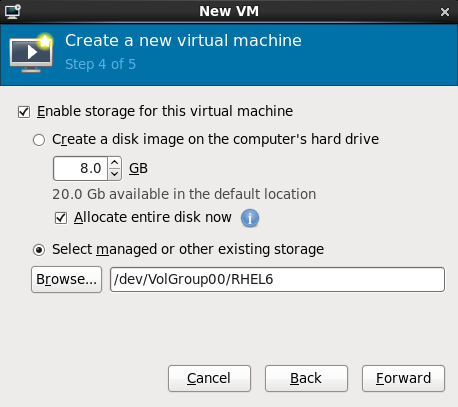

Continue to the next step.

Storage

Enable and assign storage for the Red Hat Enterprise Linux 6 guest. Assign at least 5GB for a desktop installation or at least 1GB for a minimal installation.

Migration

Live and offline migrations require guests to be installed on shared network storage. For information on setting up shared storage for guests, refer to Part V, “Virtualization storage topics”.

With the default local storage

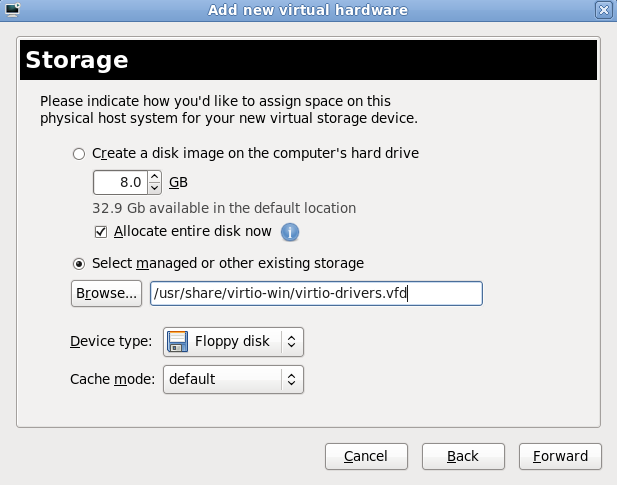

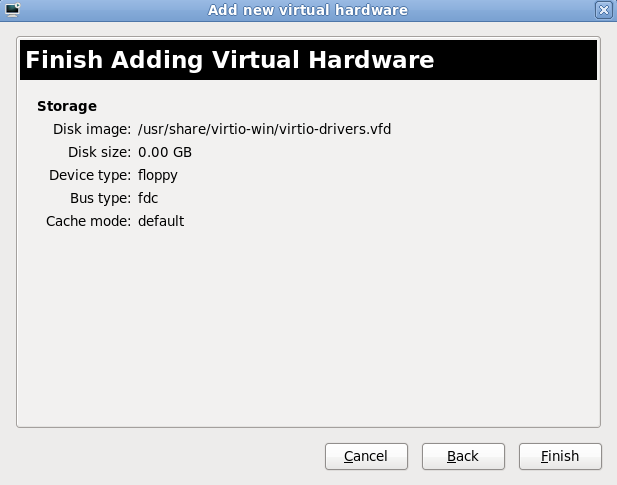

Select the Create a disk image on the computer's hard drive radio button to create a file-based image in the default storage pool, the /var/lib/libvirt/images/ directory. Enter the size of the disk image to be created. If the Allocate entire disk now check box is selected, a disk image of the specified size is created immediately. If not, the disk image grows as it fills.
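The effect of the Allocate entire disk now check box can be seen with ordinary files. The sketch below, assuming GNU dd and a filesystem that supports sparse files, creates one sparse file (comparable to leaving the box clear) and one fully written file (comparable to selecting it). make_images is a hypothetical helper, and virt-manager's own allocation mechanism may differ; this only illustrates the difference in disk usage.

```shell
#!/bin/sh
# make_images DIR SIZE_MB
# Hypothetical helper: create DIR/sparse.img and DIR/full.img, each
# SIZE_MB MiB long.
make_images() {
    dir=$1
    size_mb=$2
    # Sparse: seek to the end without writing any data blocks.
    dd if=/dev/zero of="$dir/sparse.img" bs=1M count=0 seek="$size_mb" 2>/dev/null
    # Fully allocated: write zeros to every block.
    dd if=/dev/zero of="$dir/full.img" bs=1M count="$size_mb" 2>/dev/null
}
```

After make_images /tmp 100, ls -l reports the same size for both files, while du -k /tmp/sparse.img /tmp/full.img shows that only the fully written file occupies that space on disk.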

Figure 7.7. The Create a new virtual machine window - Step 4

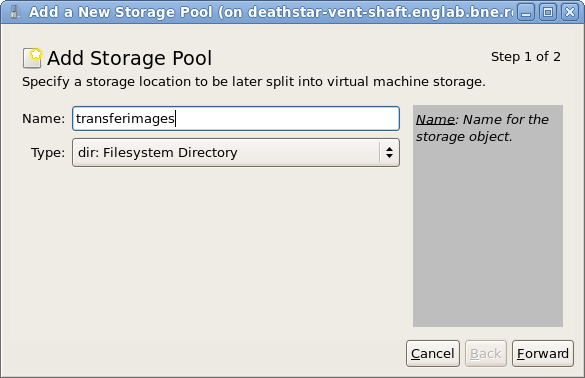

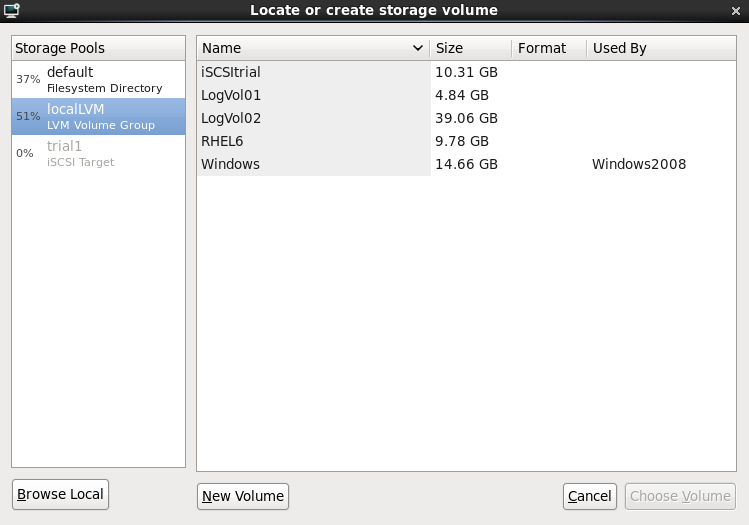

With a storage pool

Select the Select managed or other existing storage radio button to use a storage pool.

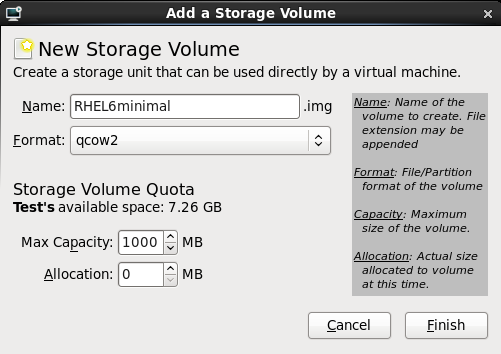

Figure 7.8. The Locate or create storage volume window

- Press the browse button to open the storage pool browser.

- Select a storage pool from the Storage Pools list.

- Optional: Press the New Volume button to create a new storage volume. Enter the name of the new storage volume.

- Press the Choose Volume button to select the volume for the virtualized guest.

Figure 7.9. The Create a new virtual machine window - Step 4

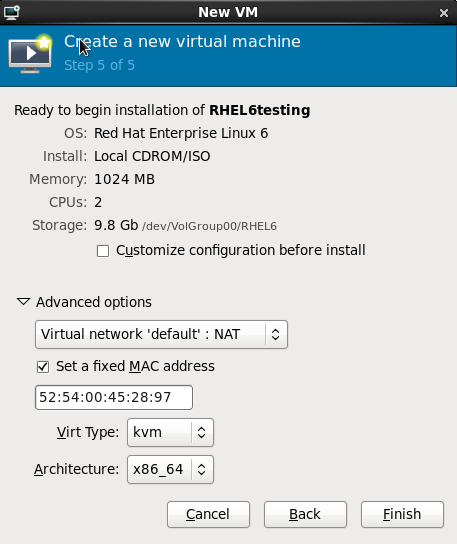

Continue to the next step.

Verify and finish

Verify that no errors were made during the wizard and that everything appears as expected.

Select the Customize configuration before install check box to change the guest's storage or network devices, to use the para-virtualized drivers, or to add additional devices.

Press the Advanced options down arrow to inspect and modify advanced options. For a standard Red Hat Enterprise Linux 6 guest, none of these options require modification.

Figure 7.10. The Create a new virtual machine window - Step 5

Finish the wizard to continue into the Red Hat Enterprise Linux installation sequence. For more information on installing Red Hat Enterprise Linux 6, refer to the Red Hat Enterprise Linux 6 Installation Guide.

7.2. Creating a Red Hat Enterprise Linux 6 guest with a network installation tree

Procedure 7.2. Creating a Red Hat Enterprise Linux 6 guest with virt-manager

Optional: Preparation

Prepare the storage environment for the virtualized guest. For more information on preparing storage, refer to Part V, “Virtualization storage topics”.

Note

Various storage types may be used for storing virtualized guests. However, for a guest to be able to use migration features, the guest must be created on networked storage.

Red Hat Enterprise Linux 6 requires at least 1GB of storage space. However, Red Hat recommends at least 5GB of storage space for a Red Hat Enterprise Linux 6 installation and for the procedures in this guide.

Open virt-manager and start the wizard

Open virt-manager by executing the virt-manager command as root or opening Applications -> System Tools -> Virtual Machine Manager.

Figure 7.11. The main virt-manager window

Press the create new virtualized guest button (see Figure 7.12, “The create new virtualized guest button”) to start the new virtualized guest wizard.

Figure 7.12. The create new virtualized guest button

The Create a new virtual machine window opens.

Name the virtualized guest

Guest names can contain letters, numbers and the following characters: '_', '.' and '-'. Guest names must be unique for migration.

Choose the installation method from the list of radio buttons.

Figure 7.13. The Create a new virtual machine window - Step 1

Continue to the next step.

7.3. Creating a Red Hat Enterprise Linux 6 guest with PXE

Procedure 7.3. Creating a Red Hat Enterprise Linux 6 guest with virt-manager

Optional: Preparation

Prepare the storage environment for the virtualized guest. For more information on preparing storage, refer to Part V, “Virtualization storage topics”.

Note

Various storage types may be used for storing virtualized guests. However, for a guest to be able to use migration features, the guest must be created on networked storage.

Red Hat Enterprise Linux 6 requires at least 1GB of storage space. However, Red Hat recommends at least 5GB of storage space for a Red Hat Enterprise Linux 6 installation and for the procedures in this guide.

Open virt-manager and start the wizard

Open virt-manager by executing the virt-manager command as root or opening Applications -> System Tools -> Virtual Machine Manager.

Figure 7.14. The main virt-manager window

Press the create new virtualized guest button (see Figure 7.15, “The create new virtualized guest button”) to start the new virtualized guest wizard.

Figure 7.15. The create new virtualized guest button

The Create a new virtual machine window opens.

Name the virtualized guest

Guest names can contain letters, numbers and the following characters: '_', '.' and '-'. Guest names must be unique for migration.

Choose the installation method from the list of radio buttons.

Figure 7.16. The Create a new virtual machine window - Step 1

Continue to the next step.

Chapter 8. Installing Red Hat Enterprise Linux 6 as a para-virtualized guest on Red Hat Enterprise Linux 5

Important note on para-virtualization

8.1. Using virt-install

This section describes installing the guest with the virt-install command. For instructions on virt-manager, refer to the procedure in Section 8.2, “Using virt-manager”.

Automating with virt-install

The following example installs a para-virtualized guest with the virt-install tool. The --vnc option shows the graphical installation. The name of the guest in the example is rhel6PV, the disk image file is rhel6PV.img and a local mirror of the Red Hat Enterprise Linux 6 installation tree is http://example.com/installation_tree/RHEL6-x86/. Replace those values with values for your system and network.

# virt-install --name rhel6PV \
   --disk /var/lib/libvirt/images/rhel6PV.img,size=5 \
   --vnc --paravirt --vcpus=2 --ram=1024 \
   --location=http://example.com/installation_tree/RHEL6-x86/

The next example replaces the graphical installation with a Kickstart file, located at http://example.com/kickstart/ks.cfg, to fully automate the installation.

# virt-install --name rhel6PV \
   --disk /var/lib/libvirt/images/rhel6PV.img,size=5 \
   --nographics --paravirt --vcpus=2 --ram=1024 \
   --location=http://example.com/installation_tree/RHEL6-x86/ \
   -x "ks=http://example.com/kickstart/ks.cfg"

8.2. Using virt-manager

Procedure 8.1. Creating a para-virtualized Red Hat Enterprise Linux 6 guest with virt-manager

Open virt-manager

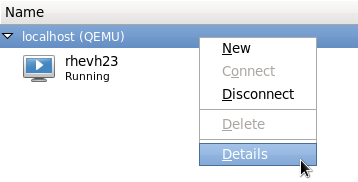

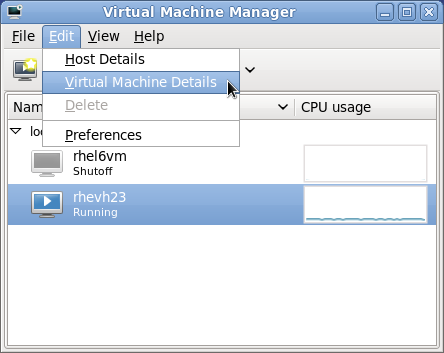

Start virt-manager. Launch the application from the Applications menu and System Tools submenu. Alternatively, run the virt-manager command as root.

Select the hypervisor

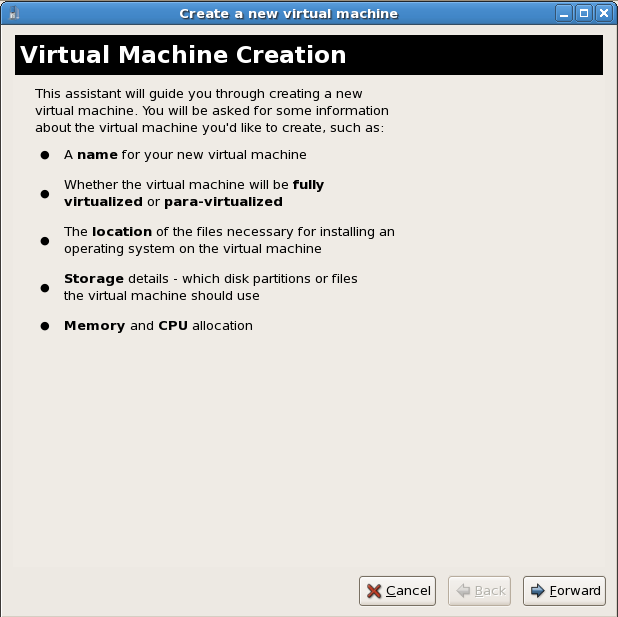

Select the hypervisor. Note that presently the KVM hypervisor is named qemu.

Connect to a hypervisor if you have not already done so. Open the File menu and select the Add Connection... option. Refer to Section 31.5, “Adding a remote connection”.

Once a hypervisor connection is selected, the New button becomes available. Press the New button.

Start the new virtual machine wizard

Pressing the New button starts the virtual machine creation wizard. Continue to the next step.

Name the virtual machine

Provide a name for your virtualized guest. Only letters, numbers and the punctuation characters '_', '.' and '-' are permitted. Continue to the next step.

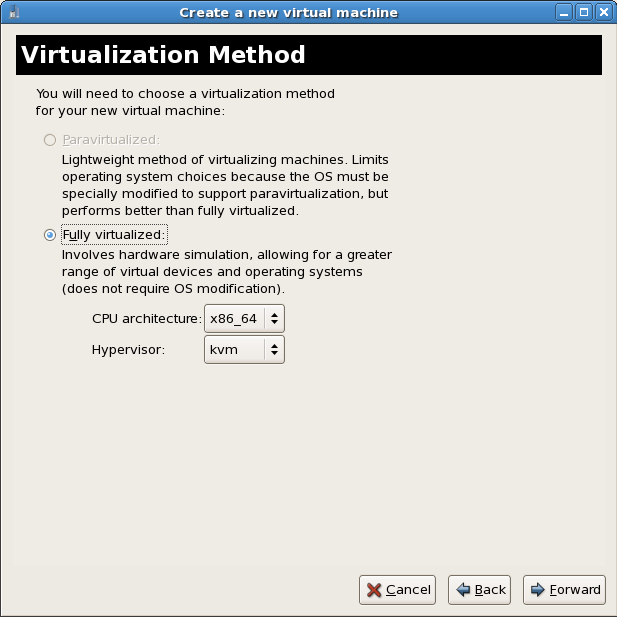

Choose a virtualization method

Select Xen para-virtualized as the virtualization method. Continue to the next step.

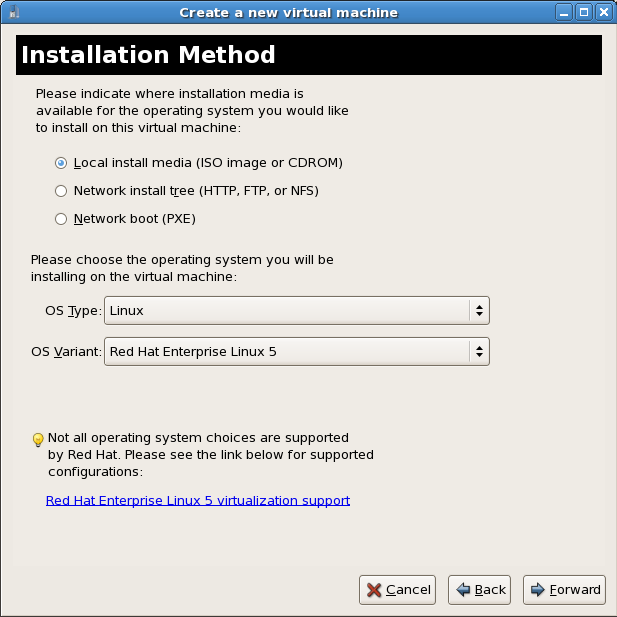

Select the installation method

Red Hat Enterprise Linux can be installed using one of the following methods:

- Local install media, either an ISO image or physical optical media.

- Select Network install tree if you have the installation tree for Red Hat Enterprise Linux hosted somewhere on your network via HTTP, FTP or NFS.

- PXE can be used if you have a PXE server configured for booting Red Hat Enterprise Linux installation media. Configuring a server to PXE boot a Red Hat Enterprise Linux installation is not covered by this guide. However, most of the installation steps are the same after the media boots.

Set OS Type to Linux and OS Variant to Red Hat Enterprise Linux 5 as shown in the screenshot. Continue to the next step.

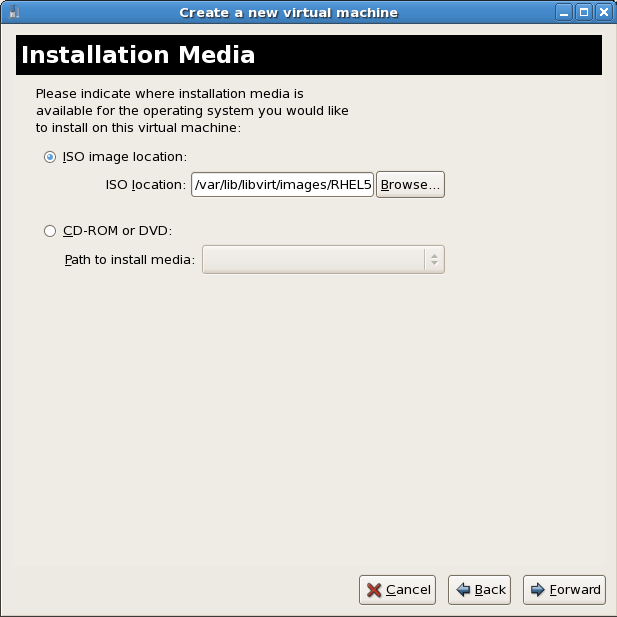

Locate installation media

Select ISO image location or CD-ROM or DVD device. This example uses an ISO file image of the Red Hat Enterprise Linux installation DVD.

- Press the browse button.

- Browse to the location of the ISO file and select the ISO image. Confirm your selection.

- The file is selected and ready to install. Continue to the next step.

Image files and SELinux

For ISO image files and guest storage images, it is recommended to use the /var/lib/libvirt/images/ directory. Any other location may require additional configuration for SELinux; refer to Section 16.2, “SELinux and virtualization” for details.

Storage setup

Assign a physical storage device (Block device) or a file-based image (File). File-based images should be stored in the /var/lib/libvirt/images/ directory to satisfy default SELinux permissions. Assign sufficient space for your virtualized guest and any applications the guest requires. Continue to the next step.

Migration

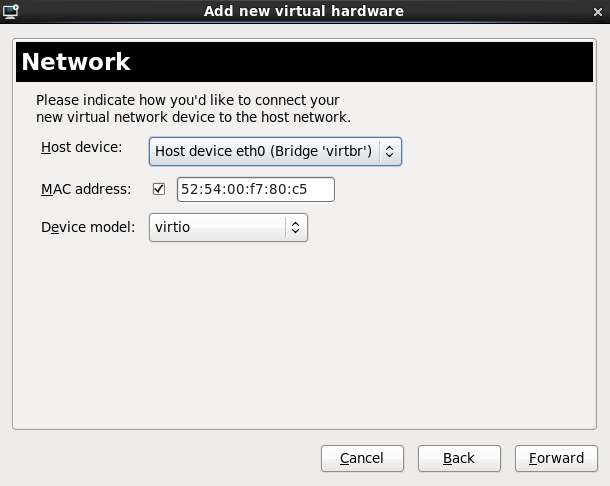

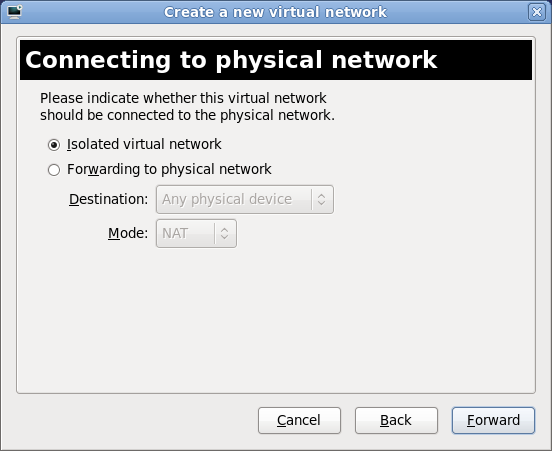

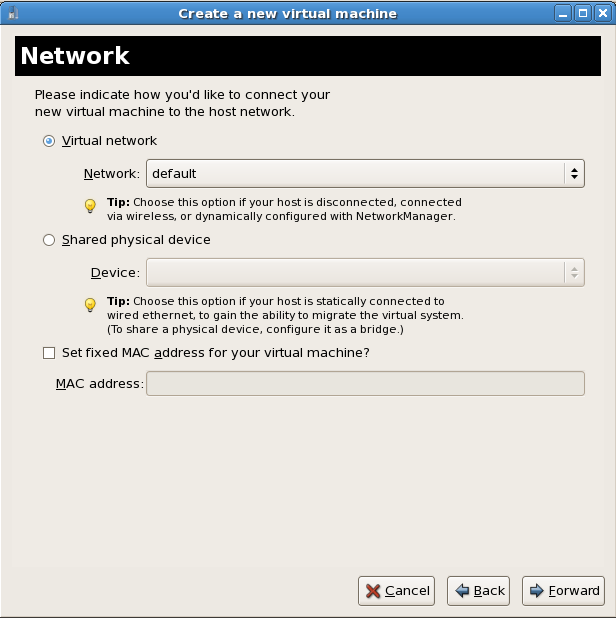

Live and offline migrations require guests to be installed on shared network storage. For information on setting up shared storage for guests, refer to Part V, “Virtualization storage topics”.

Network setup

Select either Virtual network or Shared physical device.

The virtual network option uses Network Address Translation (NAT) to share the default network device with the virtualized guest. Use the virtual network option for wireless networks.

The shared physical device option uses a network bridge to give the virtualized guest full access to a network device. Continue to the next step.

Memory and CPU allocation

The Memory and CPU Allocation window displays. Choose appropriate values for the virtualized CPUs and RAM allocation. These values affect the host's and guest's performance.

Virtualized guests require sufficient physical memory (RAM) to run efficiently and effectively. Choose a memory value which suits your guest operating system and application requirements. Remember, guests use physical RAM. Running too many guests or leaving insufficient memory for the host system results in significant usage of virtual memory and swapping. Virtual memory is significantly slower, which degrades system performance and responsiveness. Ensure you allocate sufficient memory for all guests and the host to operate effectively.

Assign sufficient virtual CPUs for the virtualized guest. If the guest runs a multithreaded application, assign the number of virtualized CPUs the guest will require to run efficiently. Do not assign more virtual CPUs than there are physical processors (or hyper-threads) available on the host system. It is possible to overallocate virtual processors; however, overallocating has a significant, negative effect on guest and host performance due to processor context switching overheads. Continue to the next step.

Verify and start guest installation

Verify the configuration, then start the guest installation procedure.

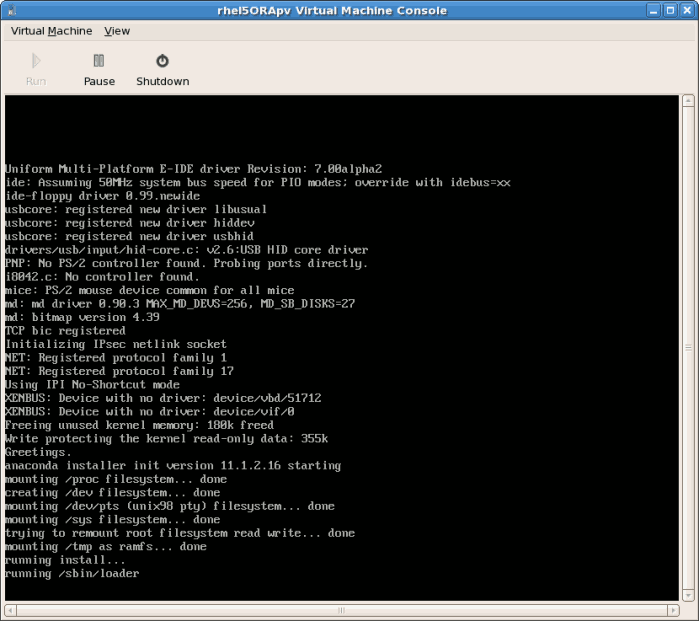

Installing Red Hat Enterprise Linux

Complete the Red Hat Enterprise Linux installation sequence. The installation sequence is covered by the Red Hat Enterprise Linux 6 Installation Guide. Refer to Red Hat Documentation for the Red Hat Enterprise Linux 6 Installation Guide.

Chapter 9. Installing a fully-virtualized Windows guest

To install a fully-virtualized Windows guest, create the guest (with virt-manager or virt-install), launch the operating system's installer inside the guest, and access the installer through virt-viewer.

The virt-viewer tool displays the graphical console of a virtual machine (via the VNC protocol). This allows you to install a fully-virtualized guest's operating system through that operating system's installer (for example, the Windows XP installer).

Installing a Windows operating system involves two steps:

- Creating the guest (using either virt-install or virt-manager)

- Installing the Windows operating system on the guest (through virt-viewer)

9.1. Using virt-install to create a guest

The virt-install command allows you to create a fully-virtualized guest from a terminal, that is, without a GUI. If you prefer to use a GUI instead, refer to Section 6.3, “Creating guests with virt-manager” for instructions on how to use virt-manager.

Important

# virt-install \
   --name=guest-name \
   --network network=default \
   --disk path=path-to-disk,size=disk-size \
   --cdrom=path-to-install-disk \
   --vnc --ram=1024

The path-to-disk must be a device (for example, /dev/sda3) or an image file (/var/lib/libvirt/images/name.img). It must also have enough free space to support the disk-size.

Important

Store all file-based guest images in /var/lib/libvirt/images/. Other directory locations for file-based images are prohibited by SELinux. If you run SELinux in enforcing mode, refer to Section 16.2, “SELinux and virtualization” for more information on installing guests.
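A script that wraps virt-install can warn when an image path falls outside the directory allowed by the default SELinux policy. The following is a minimal sketch, assuming a POSIX shell; check_disk_path is a hypothetical helper. It compares path prefixes only, so it does not resolve symbolic links or .. components, and it accepts any path under /dev/ as a block device without checking it.

```shell
#!/bin/sh
# check_disk_path PATH
# Hypothetical helper: succeed for paths under /var/lib/libvirt/images/ or
# /dev/, otherwise print a warning and fail. Prefix match only: symbolic
# links and ".." components are not resolved.
check_disk_path() {
    case $1 in
        /var/lib/libvirt/images/*|/dev/*)
            return 0 ;;
        *)
            echo "warning: $1 is outside /var/lib/libvirt/images/;" \
                "it may need SELinux configuration" >&2
            return 1 ;;
    esac
}
```

For example, check_disk_path /home/user/win.img prints a warning and fails, which a wrapper script can use to stop before calling virt-install.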

You can also run virt-install interactively. To do so, use the --prompt option:

# virt-install --prompt

Once the fully-virtualized guest is created, virt-viewer will launch the guest and run the operating system's installer. Refer to the relevant Microsoft installation documentation for instructions on how to install the operating system.

Important

If you are installing Windows 2003, press F5 as soon as virt-viewer launches and refer to Section 9.2, “Installing Windows 2003” for further instructions before proceeding.

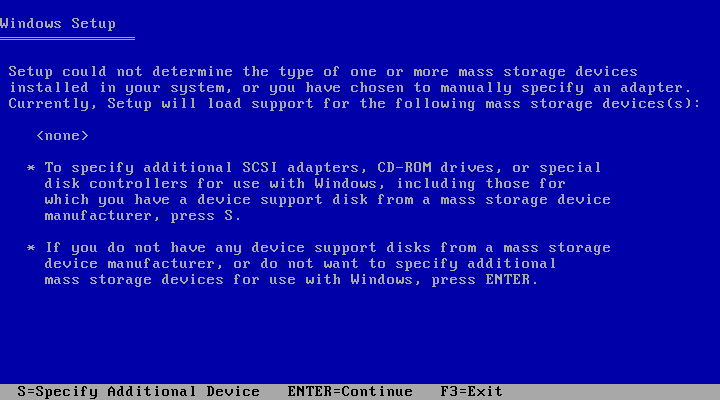

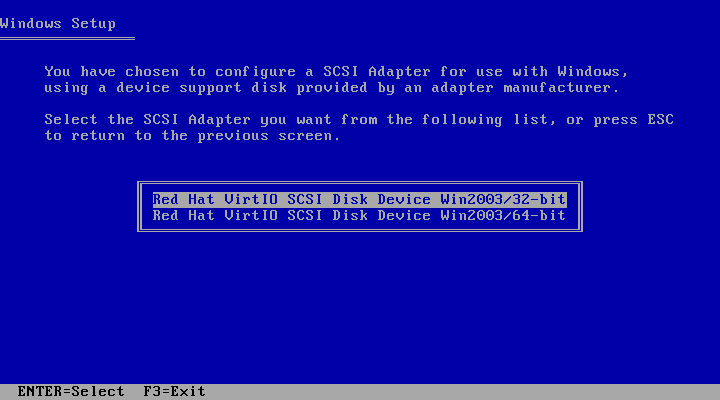

9.2. Installing Windows 2003

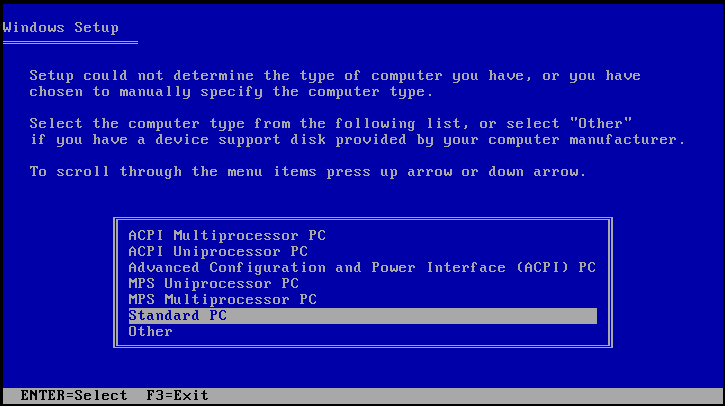

As soon as virt-viewer launches and boots the installer, press F5. If you do not press F5 at the right time, you will need to restart the installation. Pressing F5 allows you to select a different HAL or Computer Type.

Figure 9.1. Selecting a different HAL

Select Standard PC as the Computer Type. Then press Enter to continue with the installation process.